What Happens When We Trust the Box Without Opening It?

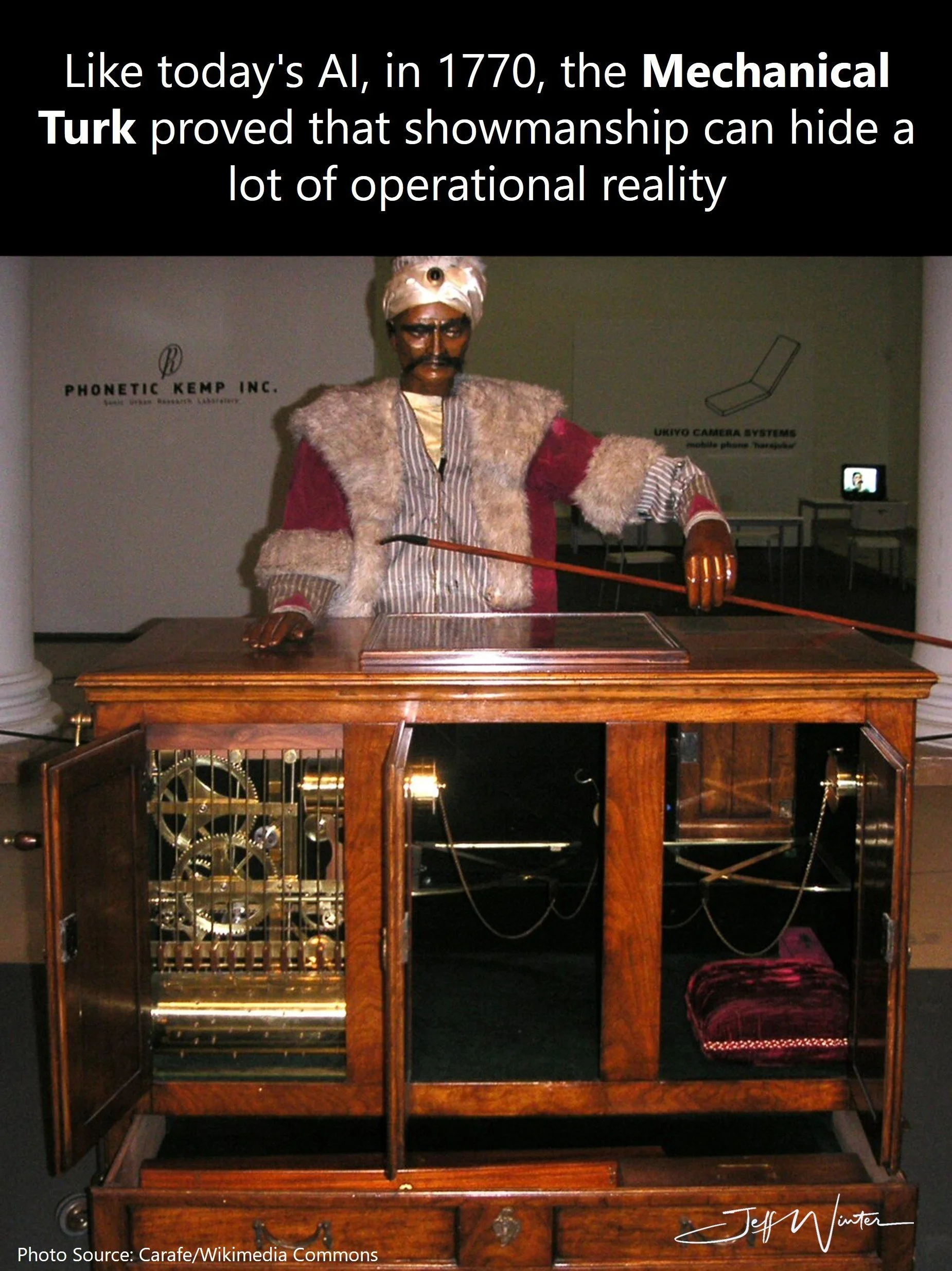

In the late 1700s, Wolfgang von Kempelen introduced something Europe had never seen: The Mechanical Turk, a so-called automated chess-playing machine. It wasn’t just a party trick; it was a sensation. The Turk beat human opponents with startling regularity, earning the admiration of royalty and the fascination of thinkers across the continent.

But the magic was a lie. Hidden inside the ornate cabinet was a cramped, overheated compartment, and in it sat a skilled chess player guiding the automaton’s every move. The crowd saw a machine winning. But it was a person all along.

It wasn’t artificial intelligence. It was artfully hidden intelligence.

And history, it seems, is repeating itself.

We’ve Just Upgraded the Costume

Today’s equivalent of the Turk wears a different costume. It’s not carved wood and Turkish robes; it’s sleek dashboards, animated workflows, and the label “AI-powered” slapped on every other slide deck.

From generative AI to machine learning platforms to autonomous optimization engines, we’re seeing a modern performance unfold. The tools promise speed, foresight, and self-sufficiency. The demos dazzle. The language is technical. The vision is seductive.

But pull back the curtain and you’ll often find something more familiar than futuristic:

Human experts manually tagging training data

Analysts tweaking prompts or hardcoding fallback logic

Engineers reformatting files and resolving schema mismatches

Models only working inside carefully curated scenarios

It’s not that these systems don’t work. Many do. The issue is that we’re mistaking assisted automation for autonomous intelligence, and that’s where things get murky.

The Performance vs. the Process

This is where the conversation gets more important… and more uncomfortable. Because performance without understanding is a trap.

We tend to equate intelligence with outcome. If the recommendation engine seems accurate, it must be smart. If the model predicted the defect, it must be advanced. But intelligence isn’t just about the result. It’s about how that result was reached, under what conditions, and with what fragility.

When leaders see AI systems working “as expected,” there’s a tendency to stop asking questions. But what if the system is performing well only under narrow circumstances? What if it’s relying on assumptions that no longer hold true? What if the insight you’re acting on isn’t an insight at all, but a statistical fluke?

In manufacturing, this can be especially risky. A forecasting model that worked perfectly last quarter might fall apart with one supplier delay. A machine vision system might flag defects reliably, until the lighting changes or the part orientation shifts. And when no one knows how the system actually works, no one knows how to fix it when it breaks.

Worse, we often scale these systems faster than we validate them. One promising pilot becomes five sites, then ten, then a global rollout, driven more by excitement than by evidence of robustness. But when the underlying processes are brittle, more scale just means more failure, faster.

Performance should prompt inspection, not celebration. The better a system seems to work, the more we owe it to ourselves to understand exactly why, and when, it works. Because once AI becomes embedded in decision-making, the cost of being wrong multiplies. Quickly.

Manufacturing’s Favorite Illusion

No industry is immune to this illusion, but manufacturing is especially vulnerable. There’s immense pressure to modernize, digitize, and “compete on data.” So naturally, the siren song of AI is strong.

From predictive maintenance to smart scheduling, nearly every solution on the market claims to be AI-enhanced. But intelligence isn’t what’s being sold. What’s being sold is the appearance of intelligence. Many of these systems still require constant oversight. They generate outputs, but not understanding. They trigger actions, but not clarity.

And yet, these tools are shaping real decisions: what to make, where to ship, when to change over lines. Decisions that affect throughput, quality, and customer satisfaction. If those decisions are being driven by systems we don’t truly understand, we’re not leading a digital transformation. We’re outsourcing critical thinking to a black box.

Start With Better Questions

So how do we fix this? We stop asking, “Does it use AI?” and start asking, “How does it work?” That means getting curious about the training data. Digging into how models handle edge cases. Asking how often a human steps in. Understanding what “autonomous” really means in a given context.

This doesn’t mean distrusting AI. It means holding it to the same standard as every other system in your operation. You wouldn’t buy a press without understanding its tolerances. You wouldn’t deploy a new ERP without knowing how it maps to your business logic. AI should be no different.

The better your questions, the better your implementation. When you understand the limitations, you can design the workflows, alerts, and human handoffs needed to make AI effective, not just impressive.

The lesson from The Mechanical Turk isn’t to reject automation. It’s to be vigilant about illusion. To resist being dazzled by interfaces and instead ask what’s beneath them.

AI will play an essential role in the future of manufacturing. It already does. But the companies that benefit the most won’t be the ones with the most models or the flashiest dashboards. They’ll be the ones who took the time to understand what’s really powering their systems, where the automation stops, and where the human work begins.

Because in the end, it’s not about whether you have AI.

It’s about whether you understand it.

References

Interesting Engineering - The Turk: Wolfgang von Kempelen’s Fake Automaton Chess Player, June 2025: https://interestingengineering.com/innovation/the-turk-fake-automaton-chess-player