From Science Project to Global Operating System: How AI Transformed Between 2023 and 2025

Artificial intelligence didn’t just grow up between 2023 and 2025; it graduated with honors, joined the workforce, ran for office (sort of), and started co-authoring Nobel-winning research. If 2022 was the “wow, it writes poems” era of AI, then the next three years were its full-blown metamorphosis into a global infrastructure, one reshaping industries, policies, research, and public expectations at a staggering pace.

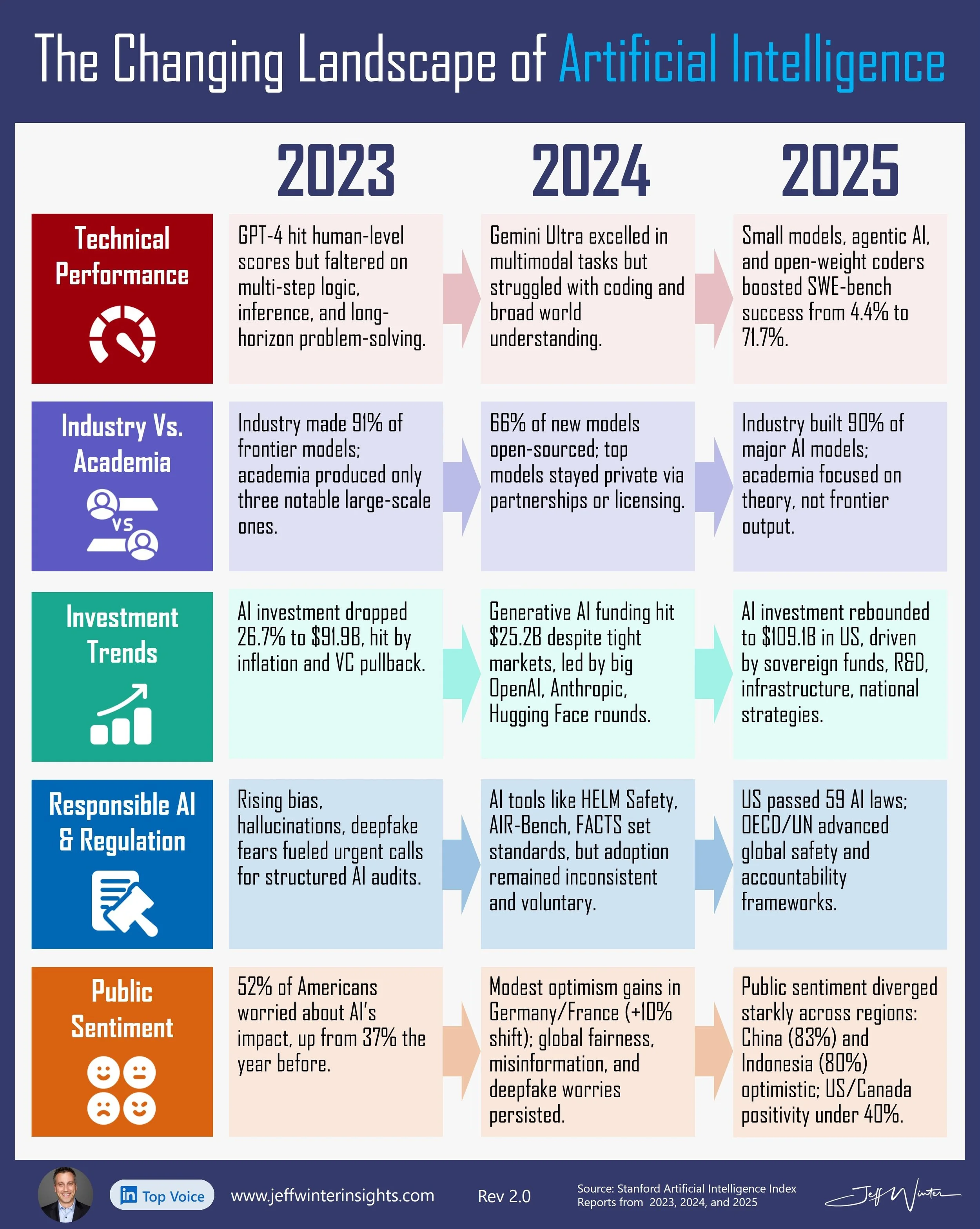

To track this whirlwind evolution, we turn to the annual Stanford AI Index Reports from 2023, 2024, and 2025. Together, they provide a dense and detailed timeline of how AI grew smarter, richer, more regulated, and more controversial. Below, we unpack the five most critical changes—from benchmark-crushing models to billion-dollar funding frenzies and the mounting existential questions they sparked.

1. Technical Performance: From Flashy Outputs to Functional Intelligence

In 2023, AI wowed the world with large language models like GPT-4, which finally achieved human-level (and in some cases, superhuman) performance on multiple benchmarks such as MMLU and HELM. Yet, under the surface, weaknesses persisted. These systems remained brittle, struggled with complex multi-step reasoning, and often hallucinated or contradicted themselves under even mildly adversarial conditions (2023 report, p. 2). They were capable, yes, but not yet reliable.

By 2024, AI’s capabilities deepened. The introduction of new benchmarks such as SWE-bench (focused on software engineering), GPQA (graduate-level, “Google-proof” Q&A), and MMMU (massive multi-discipline multimodal understanding) reflected a shift in how we evaluated “intelligence.” No longer was it enough to answer trivia; models had to reason, plan, and operate across modalities. While AI showed progress on these tougher tasks, it often faltered under real-world complexity (2024 report, p. 15).

Then came 2025, and the results were astonishing. SWE-bench accuracy, for instance, jumped from a meager 4.4% in 2023 to a commanding 71.7% in 2024 (2025 report, p. 3). The era of general-purpose AI agents began taking shape. AgentBench and similar frameworks showed AI systems navigating simulated environments, solving multi-part tasks, and adapting dynamically. Suddenly, AI wasn’t just generating polished text: it was engaging in reasoning loops, performing surgeries in virtual labs, and co-piloting software development.

It’s not just that AI became more capable. It became useful.
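To put the SWE-bench figures cited above (4.4% and 71.7%) in perspective, it helps to separate the percentage-point gain from the fold increase, since the two are easy to conflate. The snippet below is a minimal sketch of that arithmetic; the function name `describe_gain` is purely illustrative and does not come from the AI Index reports.

```python
def describe_gain(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (percentage-point gain, fold increase) between two scores."""
    points = after_pct - before_pct   # absolute gain in percentage points
    fold = after_pct / before_pct     # how many times larger the new score is
    return points, fold


# Figures quoted in the text above; everything else here is illustrative.
points, fold = describe_gain(4.4, 71.7)
print(f"Gain: {points:.1f} percentage points, roughly {fold:.1f}x the earlier score")
```

Read this way, the jump is about 67 percentage points, or roughly a sixteenfold improvement over the earlier score.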

2. Industry vs. Academia: The Power Gap Widens

While academia built the intellectual scaffolding for modern AI, it’s become increasingly sidelined in actual model development. In 2023, a stunning 91% of notable models came from industry players like OpenAI, Google DeepMind, Anthropic, and Meta. Academia produced just three significant frontier-scale models—barely a footnote in the era of billion-dollar compute clusters (2023 report, p. 1).

In 2024, that gap only widened. A record 149 foundation models were released globally, and roughly 66% were open-source, suggesting greater accessibility and collaboration. But here’s the catch: the most capable models—like Gemini Ultra—remained proprietary. They were often accessible only through commercial APIs, hidden behind legal walls and rate limits (2024 report, p. 14). Open weights may have been increasing, but not necessarily open power.

By 2025, industry accounted for nearly 90% of all top-performing models. Academia continued to lead in foundational research and paper citations, but when it came to training and deploying real-world, state-of-the-art systems, the private sector called the shots. The compute gap, along with specialized engineering teams and capital reserves, put academic institutions permanently on the back foot (2025 report, p. 3).

This dynamic has triggered fierce debates about AI equity, scientific reproducibility, and who gets to define the trajectory of a technology that affects everyone.

3. Investment Trends: From Market Jitters to Megabucks

2023 was a cold shower for AI investors. After a decade of feverish growth, private investment dropped 26.7% to $91.9 billion worldwide. The economic slowdown, higher interest rates, and VC belt-tightening hit early-stage AI startups hard. Even promising companies faced longer fundraising cycles and tougher questions from wary investors (2023 report, p. 7).

But that gloomy chapter was short-lived. In 2024, generative AI proved to be the exception to the downturn. Investment in this subfield skyrocketed to $25.2 billion, thanks to massive fundraising rounds by OpenAI, Anthropic, Hugging Face, and Inflection. Suddenly, it wasn’t just about training models—it was about building entire ecosystems around them (2024 report, p. 5).

By 2025, AI investment didn’t just recover—it surged to historic highs. The U.S. alone saw $109.1 billion in private AI investment, driven by sovereign AI strategies, cloud infrastructure projects, chip development, and cross-sector applications. AI wasn’t just a hot sector—it was now a foundational layer of digital economies worldwide (2025 report, p. 3).

Investors finally understood: this wasn’t a hype cycle. This was a paradigm shift.
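As a quick sanity check on the downturn described at the start of this section, the 26.7% drop to $91.9 billion implies a prior-year total that can be back-calculated. The sketch below does only that arithmetic; the helper name `implied_previous` is hypothetical, and the resulting figure is an inference from the two numbers quoted above, not a value taken from the report.

```python
def implied_previous(current: float, pct_drop: float) -> float:
    """Back out the earlier value, given the current value and the percent drop."""
    return current / (1 - pct_drop / 100)


current_total = 91.9   # USD billions, as quoted in the text
drop_pct = 26.7        # percent decline, as quoted in the text

previous_total = implied_previous(current_total, drop_pct)
print(f"Implied prior-year total: ${previous_total:.1f}B")
print(f"Implied absolute decline: ${previous_total - current_total:.1f}B")
```

Under these assumptions, the prior-year total works out to about $125 billion, a decline of roughly $33 billion.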

4. Responsible AI and Ethics: From Talking Points to Policy Mandates

In 2023, AI ethics was mostly discussed in panels and PowerPoint decks, not product launches. Deepfakes disrupted elections, LLMs spewed toxic outputs, and companies promised "safety teams" without real accountability. The field lacked standardized frameworks or reporting tools, and even major players rarely disclosed how they tested model risks (2023 report, p. 5).

2024 brought a wave of new tools and benchmarks. HELM Safety, AIR-Bench, and FACTS emerged to evaluate things like hallucination rates, bias, and factual accuracy. But these tools remained underused, with adoption limited and mostly voluntary. Comparing models on ethical grounds was like comparing apples to unregulated pears (2024 report, p. 5).

Then 2025 hit like a regulatory tsunami. U.S. federal agencies introduced 59 AI-related regulations, more than double the previous year’s count. Globally, the OECD, the U.N., and other coalitions pushed comprehensive frameworks that emphasized transparency, fairness, explainability, and red-team testing. Compliance stopped being optional. Responsible AI had finally moved from philosophy to law (2025 report, p. 3).

5. Public Perception: Trust Issues and Global Disparities

Public trust in AI began eroding in 2023. In the U.S., Pew reported that 52% of citizens were more concerned than excited about AI—a sharp increase from just 37% the year before. The spike reflected fears about job loss, misinformation, and the rising influence of AI in decision-making (2023 report, p. 10).

By 2024, the mood was mixed. Optimism rose by about 10% in countries like Germany and France, driven in part by education initiatives and transparent applications. Yet, fears around deepfakes, surveillance, and biased algorithms continued to dominate headlines globally (2024 report, p. 6).

In 2025, the perception chasm widened. In China, 83% of respondents expressed optimism about AI’s future, followed closely by Indonesia and Thailand. Meanwhile, only 39% of Americans and 40% of Canadians shared that view. Cultural values, media framing, and local policy responses all shaped wildly different public experiences of the same technology (2025 report, p. 4).

Final Thought

AI’s evolution from 2023 to 2025 wasn’t linear. It was exponential, messy, and riddled with contradictions. It shattered benchmarks and entrenched inequalities. It unlocked life-saving science and triggered existential fears. It attracted billions in funding and billions in side-eyes. In just three years, AI transitioned from a promising tool to a global operating system.

The question now isn’t whether AI will change the world. It already has.

The question is: who gets to shape what happens next?

References:

Stanford University HAI - Artificial Intelligence Index Report 2023: https://hai.stanford.edu/ai-index/2023-ai-index-report

Stanford University HAI - Artificial Intelligence Index Report 2024: https://hai.stanford.edu/ai-index/2024-ai-index-report

Stanford University HAI - Artificial Intelligence Index Report 2025: https://hai.stanford.edu/ai-index/2025-ai-index-report