The Move from Industry 3.0 to 4.0 in Baby Steps

Originally published June 10th, 2025. Updated January 2nd, 2026.

Version 2.1

Let’s clear something up right away. When I talk about Industry 4.0, I am not describing a finish line.

I am not talking about a shiny badge of technological achievement that only a handful of elite manufacturers can earn. I am talking about a living, breathing era. The one we are all in right now. An era shaped by accelerating technology, shifting business models, and rising expectations for agility, sustainability, and resilience.

At the same time, Industry 4.0 is also a vision. A north star for digital transformation. Full automation. Intelligent systems. Adaptive supply chains. Deeply integrated cyber-physical environments.

That dual identity matters. Industry 4.0 is both a present reality and a future aspiration. And that is exactly what makes it powerful. It is also what makes it confusing for so many organizations.

Here is the trap. When Industry 4.0 is framed as one massive leap, it becomes paralyzing. Too big. Too complex. Too abstract. It starts to feel binary, like you either have it or you do not.

That mindset stalls progress.

Which is why I stopped thinking about Industry 4.0 as a leap and started thinking about it as a series of deliberate steps: baby steps.

Not a Maturity Model. Not a Framework. Just Progress.

These “baby steps” are not meant to be a rigid checklist or a formal maturity model. Think of them as conceptual mile markers. A way to break a massive, fuzzy ambition into something more tangible and practical.

Each step represents a cluster of capabilities and initiatives that people already associate with Industry 4.0. The difference is that instead of bundling everything into one overwhelming transformation, we break it apart. Each part delivers real business value. Each part requires its own technical investments. Each part introduces new business requirements, from process design to training to change management.

You can think of these steps as different levers you can pull or different journeys you can pursue. Some are sequential and build directly on prior progress. Others can happen in parallel. And in many organizations, some of these steps already exist, even if others are missing.

Here is the key idea. These steps are not mutually exclusive and they are not exhaustive. They are directional. They are flexible. And most importantly, they are designed to spark action and meaningful conversation.

Why These Steps Matter

What makes these baby steps so powerful is how they shift the mindset from “Industry 4.0 or bust” to “progress over perfection.” Instead of asking, “Are we doing Industry 4.0 yet?” manufacturers can ask:

What value are we currently unlocking from our digital initiatives?

Where are we stuck and why?

Which capabilities do we already have, and which ones are next?

Do we have the technical AND business readiness to move forward?

This shift in perspective helps avoid the all-or-nothing trap. It allows organizations to align initiatives with business priorities, resource constraints, and their unique starting point. It encourages strategic planning instead of reactive tech buying. And it opens up room for experimentation, iteration, and learning along the way.

Your Journey. Your Destination.

Here’s the fun part: there’s no universal definition of what “done” looks like.

For some companies, the goal might be lights-out manufacturing. For others, it’s complete data-driven traceability. Others still might focus on flexible operations, better worker enablement, or integration across global operations.

The point of the baby steps isn’t to dictate your destination. It’s to give you a map, and maybe even a little courage to keep moving forward. To show you that you don’t need to leap straight to cyber-physical utopia. You can start with one capability, one initiative, one step. And each step, no matter how small, gets you closer to your own version of Industry 4.0.

So no… Industry 4.0 isn’t a finish line. But it also isn’t a fantasy. It’s a journey of real, measurable progress. And it starts with one baby step.

Industry 3.0: Full Automation

The rise of Industry 3.0 marked a fundamental shift in how work, productivity, and human involvement were defined in manufacturing. It was not just about adding machines. It was about handing over control. This was the dawn of full automation. Not simple mechanization, but the deliberate design of systems that could operate continuously, precisely, and often without people. Repetitive, hazardous, and precision critical tasks moved to PLCs, CNC machines, and robotics. Humans shifted from doers to overseers.

It is important to make one distinction. While Industry 3.0 originally described a historical era, here it represents a state of capability. Achieving Industry 3.0 does not mean being stuck in the past. It means fully realizing what was possible at the time. Mature automation. Digital controls. Integrated, standardized, highly repeatable systems. Not partial adoption, but a complete implementation of the twentieth century vision.

That maturity came with tradeoffs. Automation delivered efficiency, but it also introduced rigidity. Systems were hard coded. Change was expensive. Adaptability was sacrificed in favor of consistency. Machines could run all day, but they could not easily flex in real time. So when we talk about doing Industry 3.0, we are assuming the foundation is solid. Processes are largely automated. Utilization is high. Human error is reduced. The environment is stable.

But the world has moved on. And competitiveness now depends on moving beyond it.

Value Gained:

At its core, full automation delivered the dream of operational excellence at scale.

Efficiency: Machines operate continuously. They don’t take breaks, call in sick, or need shift changes. Processes could be fine-tuned to reduce waste and increase overall equipment effectiveness (OEE).

Speed: Automated lines drastically shortened cycle times. High-speed assembly, material handling, and inspection could now happen in parallel and without pause.

Accuracy: Repeatability became the default. Whether drilling, welding, or filling, automated systems could perform tasks to very tight tolerances for millions of cycles with minimal deviation.

Cost Reduction Over Time: Though capital-intensive up front, full automation brought down cost per unit over the long run by minimizing labor costs, reducing rework, and decreasing scrap.

Safety: Machines replaced humans in hazardous tasks (welding, lifting, exposure to chemicals, etc.), making factories safer and reducing incidents.

Consistent Quality: With human variability out of the loop, defect rates plummeted and product quality became more predictable.
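To make the OEE reference above concrete, here is a minimal sketch of the commonly used calculation: availability times performance times quality. The `ShiftData` fields and the sample numbers are illustrative assumptions, not figures from a real line.

```python
from dataclasses import dataclass


@dataclass
class ShiftData:
    # All field names are hypothetical, chosen for this illustration.
    planned_minutes: float      # scheduled production time
    downtime_minutes: float     # stops during that scheduled time
    ideal_cycle_seconds: float  # fastest sustainable time per unit
    total_units: int            # everything produced, good or bad
    good_units: int             # units that passed inspection


def oee(shift: ShiftData) -> dict:
    """Return availability, performance, quality, and their product (OEE)."""
    run_minutes = shift.planned_minutes - shift.downtime_minutes
    if shift.planned_minutes <= 0 or run_minutes <= 0 or shift.total_units <= 0:
        raise ValueError("need positive planned/run time and at least one unit")
    availability = run_minutes / shift.planned_minutes
    # Ideal time to make the units, divided by the time actually spent running.
    performance = (shift.ideal_cycle_seconds * shift.total_units) / (run_minutes * 60)
    quality = shift.good_units / shift.total_units
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


if __name__ == "__main__":
    result = oee(ShiftData(480, 60, 30, 700, 665))
    print({name: round(value, 3) for name, value in result.items()})
```

Breaking OEE into its three factors shows where the loss is coming from (stops, slow cycles, or rejects) instead of hiding it in one number.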

Technical Requirements:

Getting to full automation meant building a robust, tightly integrated technical stack capable of executing with both speed and precision.

Industrial Robots: These machines handled repetitive, precision-heavy, or dangerous tasks. They dominated in areas like welding, pick-and-place, painting, packaging, and inspection. Designed for speed and durability, they transformed productivity on high-volume lines.

PLCs (Programmable Logic Controllers): The brains of industrial control systems. PLCs executed logic with millisecond-level precision to manage sequences, interlocks, machine states, and safety logic across the plant.

SCADA (Supervisory Control and Data Acquisition) Systems: Provided centralized control and monitoring. SCADA allowed operators to visualize processes, respond to alarms, and ensure that entire systems were functioning as designed.

Sensors & Actuators: Essential for feeding real-time data to machines and executing mechanical responses. From position sensors and limit switches to motors and solenoids, this layer enabled the system to “sense and respond.”

Hardwired Industrial Networks: Unlike today’s flexible wireless infrastructure, these systems relied on deterministic, hardwired connections, ensuring real-time, uninterrupted communication between devices and controllers.
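As a rough illustration of the interlock and machine-state logic a PLC handles, here is a simulated scan cycle with a latching start/stop circuit. Real PLCs are programmed in IEC 61131-3 languages such as ladder logic or structured text, not Python, and the signal names below are hypothetical.

```python
def scan(inputs: dict, motor_running: bool) -> bool:
    """One scan: read inputs, evaluate logic, return the new motor output."""
    # Interlock: the guard must be closed and the emergency stop released.
    interlocks_ok = inputs["guard_closed"] and not inputs["estop_pressed"]
    # Seal-in: a start press latches the motor on until something drops it.
    run_request = inputs["start_button"] or motor_running
    return interlocks_ok and run_request and not inputs["stop_button"]


def run_scans(input_sequence: list) -> list:
    """Feed a sequence of input snapshots through repeated scans."""
    motor = False
    history = []
    for inputs in input_sequence:
        motor = scan(inputs, motor)
        history.append(motor)
    return history


if __name__ == "__main__":
    idle = {"guard_closed": True, "estop_pressed": False,
            "start_button": False, "stop_button": False}
    sequence = [
        idle,
        {**idle, "start_button": True},   # operator presses start
        idle,                             # start released, motor stays latched
        {**idle, "guard_closed": False},  # guard opened, interlock drops the motor
        idle,                             # guard closed again, no automatic restart
    ]
    print(run_scans(sequence))
```

The point of the sketch is the rigidity described above: the behavior is fixed in logic, which makes it repeatable and safe, but changing it means reprogramming.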

Business Requirements:

The technical achievements of Industry 3.0 would have fallen apart without a corresponding shift in how businesses operated. Full automation required companies to think, and behave, with engineering-level discipline.

Standardized Processes: For machines to take over, every step had to be defined, optimized, and frozen. Processes needed to be stripped of ambiguity, and variability had to be minimized at the source.

Detailed Work Instructions and Engineering Specs: The logic behind each automated task had to be programmed, meaning upstream design and documentation had to be airtight. Vague process flows or tribal knowledge simply didn’t work.

Skilled Maintenance and Engineering Teams: While less labor was needed to run the lines, more was required behind the scenes. This included technicians, programmers, and engineers to install, calibrate, and maintain complex systems.

Investment in CapEx Planning: Full automation came with high up-front costs. Business cases had to account for ROI horizons, productivity gains, and long-term operational savings.

Industry 3.1: Connected Operations

Industry 3.0 gave us machines that could run without us. Industry 3.1 begins the work of making those machines work together.

Full automation delivered precision, consistency, and uptime, but it also created a new problem. Operational silos. Machines performed their tasks exceptionally well, but largely in isolation. There was little coordination across systems, no shared context, and no meaningful feedback loop. You could run fast, but you could not steer with agility. That gap is exactly what Industry 3.1 addresses.

Industry 3.1 is the connective layer between the deterministic automation of Industry 3.0 and the data driven intelligence of Industry 4.0. It does not replace automation. It orchestrates it. The goal is connected operations, where machines, systems, and devices communicate in real time. Not just passing signals, but exchanging meaningful data that can influence decisions, shift priorities, and adjust processes dynamically.

This is no longer just about making things go. It is about making them go together. Production stops being a collection of isolated steps and starts behaving like a coordinated system. A synchronized flow of interconnected processes, guided by real time visibility into what is happening, where it is happening, and what needs to happen next.

Value Gained:

Connected operations unlock the ability to see what’s happening as it’s happening, and to respond accordingly. Instead of relying on post-shift reports or siloed control systems, organizations gain a dynamic view of operations that is shared across teams and functions.

Coordination across assets and teams becomes fluid. When one machine signals a slowdown, upstream and downstream systems can adjust accordingly.

Scheduling becomes responsive. Work orders can be dynamically reordered, production plans adjusted, and exceptions managed in real time.

Downtime and waste decrease as machines and systems no longer operate in ignorance of each other.

Data starts to flow horizontally, not just vertically. It links maintenance, quality, and production around a shared, live picture of performance.

Technical Requirements:

Connecting operations isn’t just about adding cables or routers. It’s about engineering a responsive, resilient, and interoperable ecosystem. This layer of technology acts as the digital nervous system of the plant.

Machine-to-Machine (M2M) Network Infrastructure: Enables devices, sensors, and machines to share real-time data. This could be over protocols like OPC UA, MQTT, or industrial Ethernet, depending on latency and reliability needs.

Wired and Wireless Communication Technologies: High-throughput Ethernet for fixed assets, industrial Wi-Fi or private 5G for mobile or retrofit applications. The goal is seamless, uninterrupted connectivity.

Gateways and Protocol Translators: Legacy machines don’t naturally speak modern languages. Gateways help bridge old and new equipment, enabling backward compatibility without replacement.

Edge Computing Devices: These process and filter data locally, reducing network load and enabling faster reactions. They can run simple analytics, detect anomalies, or buffer data during outages.
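The edge computing item above mentions filtering data locally and buffering it during outages. Here is a minimal sketch of both ideas, assuming a simple deadband filter and an in-memory store-and-forward queue. The `EdgeBuffer` class and the `send` callback are hypothetical stand-ins for whatever gateway software and uplink a plant actually uses.

```python
from collections import deque


class EdgeBuffer:
    """Keeps only meaningful changes and holds them until the uplink accepts them."""

    def __init__(self, deadband: float, max_buffered: int = 1000):
        self.deadband = deadband
        self.last_kept = None
        # Bounded queue: if an outage lasts too long, the oldest readings are dropped.
        self.pending = deque(maxlen=max_buffered)

    def ingest(self, timestamp: float, value: float) -> None:
        """Keep a reading only if it moved more than the deadband since the last kept one."""
        if self.last_kept is None or abs(value - self.last_kept) > self.deadband:
            self.pending.append((timestamp, value))
            self.last_kept = value

    def flush(self, send) -> int:
        """Forward buffered readings in order; stop at the first failed send."""
        sent = 0
        while self.pending:
            if not send(self.pending[0]):
                break  # uplink is down: keep the reading for the next attempt
            self.pending.popleft()
            sent += 1
        return sent


if __name__ == "__main__":
    edge = EdgeBuffer(deadband=0.5)
    for t, v in enumerate([20.0, 20.1, 20.6, 20.7]):
        edge.ingest(float(t), v)
    print(edge.flush(lambda reading: False))  # simulated outage
    print(edge.flush(lambda reading: True))   # uplink restored
```

A reading is only removed from the queue after `send` reports success, so a dropped connection delays data rather than losing it, up to the buffer limit.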

Business Requirements:

While the tech enables communication, it’s the business side that must define what gets shared, how, and why. Connected operations require more than good hardware. They demand alignment across functions, disciplines, and mindsets.

Tight Integration Between IT and OT: IT manages the networks, cybersecurity, and protocols. OT understands the machines, processes, and constraints. Without collaboration, connectivity becomes chaos.

Standardized Communication Protocols and Data Models: Systems need a shared “language.” Standardizing how machines report status, alarms, and performance ensures clean, usable data across all levels.

Cybersecurity Preparedness: More connections mean more risk. Manufacturers must develop and enforce policies for access control, network segmentation, and threat detection to secure their new digital pathways.

Governance and Responsiveness: With live data comes the need for live decision-making. Organizations must rethink their escalation paths, empower frontline staff to act on insights, and cultivate a culture of real-time responsiveness.
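To show what a shared “language” for machine status can look like, here is a small sketch that maps vendor-specific state codes onto one common set of states. The vendor names, raw codes, and `MachineState` values are all invented for illustration. A real plant would define them in its own data model.

```python
from enum import Enum


class MachineState(Enum):
    RUNNING = "running"
    IDLE = "idle"
    DOWN = "down"


# Hypothetical vendor codes: each controller family reports state differently.
VENDOR_STATE_MAP = {
    ("vendor_a", "RUN"): MachineState.RUNNING,
    ("vendor_a", "STBY"): MachineState.IDLE,
    ("vendor_a", "FLT"): MachineState.DOWN,
    ("vendor_b", 1): MachineState.RUNNING,
    ("vendor_b", 2): MachineState.IDLE,
    ("vendor_b", 3): MachineState.DOWN,
}


def normalize_status(machine_id: str, vendor: str, raw_state) -> dict:
    """Translate a vendor-specific state into the shared status message."""
    try:
        state = VENDOR_STATE_MAP[(vendor, raw_state)]
    except KeyError:
        # Fail loudly: an unmapped code should be fixed in the model, not guessed.
        raise ValueError(f"unmapped state {raw_state!r} for {vendor}") from None
    return {"machine_id": machine_id, "state": state.value}


if __name__ == "__main__":
    print(normalize_status("press-07", "vendor_a", "STBY"))
    print(normalize_status("filler-02", "vendor_b", 3))
```

Once every machine reports through the same small vocabulary, dashboards and downstream systems no longer need to know which vendor built what.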

Industry 3.2: Digitized Assets

While connected operations in Industry 3.1 gave machines the ability to talk to each other, Industry 3.2 is where manufacturing finally starts listening. Consistently. Comprehensively. And in context. This is the point where signals turn into records and events turn into knowledge.

This is also the moment manufacturing begins to leave the paper era behind. Greasy clipboards fade away. Handwritten logs disappear. Whiteboards covered in magnets and marker ink stop being the system of record. In their place emerges structured, real time, digital data. Data that is captured at the source. Data that is continuously updated. Data that is accessible to everyone who needs it, not just the person standing closest to the machine.

It is important to remember that connectivity alone does not equal digitization. Being connected simply allows data to move. It does not ensure that the data is captured correctly, standardized, contextualized, or stored in a way that makes it useful. Many plants believe they are digital because their machines are connected, yet critical information still lives in spreadsheets, notebooks, and tribal knowledge. Industry 3.2 closes that gap.

Digitized assets turn scattered signals into reliable operational truth. They create a consistent record of what happened, when it happened, and under what conditions. This is where traceability becomes possible at scale. This is where compliance becomes easier instead of harder. And this is where operations gain the foundation needed for faster root cause analysis and more confident decision making.

Industry 3.2 shifts manufacturing from reactive to proactive management. Problems no longer have to be discovered after the fact. Trends become visible as they emerge. Decisions become grounded in evidence rather than intuition. It is the digital nervous system not just gaining connectivity, but gaining memory.

Value Gained:

The first and most obvious value of digitization is eliminating paper. No more transcribing shift notes. No more manually entering measurements after the fact. Every event, check, and action is logged at the point of activity, automatically or through guided digital inputs.

Human error is slashed: Transcription mistakes, forgotten entries, and ambiguous handwriting disappear.

Processes accelerate: Data entry happens in parallel with the work itself, not after the fact.

Real-time visibility is grounded in fact: Dashboards and alerts are no longer driven by assumptions or estimates. They reflect actual system state.

Compliance becomes built-in: Digital records with timestamps, user IDs, and validations eliminate the gaps auditors love to find.

Operations become data-generating by design: Every workflow becomes an input into broader analytics, root cause analysis, and continuous improvement initiatives.

This is the moment you stop operating in the dark. You don’t just see what’s happening—you create a permanent, accurate, and useful record of it.

Technical Requirements:

Digitizing assets requires a layered digital infrastructure—not just to capture data, but to ensure that data is complete, contextualized, and reliable. This is where software and hardware meet to form the digital fabric of modern operations.

MES (Manufacturing Execution Systems): These serve as the hub for collecting real-time production data. They also manage workflows, operator interfaces, quality checks, and job tracking, digitizing both human and machine inputs.

Digital Forms and Checklists: Whether via tablets, touchscreens, or mobile devices, forms replace paper-based procedures. Work instructions, signoffs, and inspections happen digitally, and are validated in real time.

Data Historians and Databases: Structured storage is critical. This is where time-series data (e.g. from sensors) and transactional data (e.g. production events) are archived for reporting and analysis.

Sensor and Machine Data Integration: Machines begin to feed live process parameters (temperature, torque, cycle time, etc.) directly into the digital stack, allowing for context-rich digital records.

User Interfaces and Edge Devices: The user experience matters. Tablets, HMIs, and digital kiosks must be fast, intuitive, and resilient enough for industrial environments.
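As a sketch of what “validated in real time” can mean for a digital check, here is one way to capture an inspection value with a timestamp, a user ID, and an in-spec flag at the moment of entry. The `CheckRecord` fields and the limits are hypothetical. An MES or forms tool would have its own schema.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)  # frozen: a record cannot be edited after it is created
class CheckRecord:
    check_id: str
    user_id: str
    value: float
    in_spec: bool
    recorded_at: datetime


def record_check(check_id: str, user_id: str, value: float,
                 low: float, high: float,
                 now: Optional[datetime] = None) -> CheckRecord:
    """Validate the entry at the point of capture and stamp who and when."""
    if not user_id.strip():
        raise ValueError("user_id is required for every record")
    if low > high:
        raise ValueError("lower limit must not exceed upper limit")
    recorded_at = now or datetime.now(timezone.utc)
    return CheckRecord(check_id, user_id, value, low <= value <= high, recorded_at)


if __name__ == "__main__":
    rec = record_check("torque-01", "op42", 12.5, low=10.0, high=15.0)
    print(rec.in_spec, rec.recorded_at.isoformat())
```

Out-of-spec values are still recorded, just flagged, so the record reflects what actually happened rather than what was supposed to happen.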

Business Requirements:

Digitized assets aren’t useful on their own. They require human understanding and engagement to unlock their full value. This is where many digital initiatives stall: the systems are in place, but the people aren’t prepared to use them.

Training in Data Interpretation: Operators, technicians, and team leads must understand the meaning behind digital signals. What does an out-of-range value mean? How should trends be read? What requires intervention? Building this literacy ensures data drives action.

Ownership of Data Quality: Those entering data, via digital forms or operator inputs, must be accountable for its accuracy and completeness. This includes understanding why precision matters and how their input contributes to bigger decisions.

Consistent Workflows and Standard Operating Procedures (SOPs): In a digital system, variation causes errors. Workflows must be clearly defined and enforced to ensure consistency in how data is collected, validated, and used.

Industry 3.3: Business Systems Integration

Up to this point, Industry 3.x has been largely focused on the factory itself. Making machines talk. Making processes visible. Making data digital. But none of this exists in isolation. Every product produced, every decision made, and every disruption felt on the shop floor is connected to broader business functions like finance, supply chain, quality, customer service, and product development. What happens on the floor ultimately shows up in margins, forecasts, customer commitments, and brand reputation.

Industry 3.3 is where those two worlds finally converge. This is where operational data stops being trapped locally and begins flowing upstream into the enterprise. Execution data feeds ERP systems. Quality events inform QMS platforms in near real time. Production realities influence planning, inventory, and customer promises. The factory is no longer a black box at the edge of the business. It becomes a fully participating node in the enterprise ecosystem.

This is the moment where plant level execution becomes enterprise aligned. MES no longer operates as a standalone system focused only on throughput and yield. It exchanges context with ERP, maintenance, quality, planning, and supply chain systems. The result is a business that responds faster and plans more accurately. Forecasts improve because they are grounded in reality. Financials reflect what actually happened, not what was assumed. Leaders gain confidence that decisions are based on truth, not stale or incomplete information.

But this only works if integration goes beyond simple transactions. Pushing data from one system to another is not enough. Integration must be semantic. Systems need shared definitions, shared context, and shared understanding of what the data represents. A work order, a defect, a unit of production, or a delay must mean the same thing everywhere it appears.

This is where many organizations struggle. They are technically integrated, but conceptually misaligned. Industry 3.3 closes that gap. It creates a common operational language across the enterprise. And once that language exists, the distance between the shop floor and the boardroom shrinks dramatically. Decisions speed up. Tradeoffs become clearer. And the business starts operating as a single, connected system rather than a collection of loosely coordinated functions.

Value Gained:

Before integration, manufacturing and business functions often operated in parallel, connected in theory, but disjointed in practice. Plans were made in ERP systems and executed in isolation. Actuals were manually reconciled days or weeks later. This created delays, inaccuracies, and missed opportunities.

Production data flows in real time to enterprise systems: Scheduling, inventory management, and financial forecasting are grounded in what’s actually happening, not just what was planned.

End-to-end traceability improves: Quality issues, supply disruptions, and production delays can be traced directly to specific work orders, machines, or materials.

Decision latency shrinks: Business functions gain timely insight into operational conditions, enabling faster adjustments to plans, pricing, or customer commitments.

Reporting becomes automated and accurate: No more reconciling spreadsheets across functions. Data is aligned and shared through a common source of truth.

This is the step where operations become part of the business conversation, not just a cost center waiting to be measured.

Technical Requirements:

This is one of the most strategically critical, and chronically underestimated, steps in the Industry 3.x journey. Enterprise systems like ERP, QMS, CRM, SCM, and PLM weren’t designed with real-time plant-floor data in mind. And operational systems like MES or SCADA were never meant to speak fluent SAP or Salesforce. Each was built with its own assumptions, timelines, data models, and intent.

Business Systems Integration doesn’t just mean linking platforms. It means architecting bi-directional, real-time communication across functions, so that every forecast, work order, inventory transaction, or customer promise is backed by live operational truth. This requires deep planning, clean interfaces, and a shared understanding of how business and execution actually fit together.

To build that foundation, organizations must invest in:

Bi-Directional System Interfaces: Integration must flow both ways. ERP pushes orders, materials, and schedules down; MES pushes completions, scrap, and delays back up. QMS sends NC alerts to MES; MES feeds inspection data to QMS. CRM queries delivery status; SCM adapts to real-time consumption. Systems must publish and subscribe to one another continuously and not just exchange files at shift end.

Transaction Mapping and Reconciliation Logic: One work order in ERP might generate hundreds of events in MES. Those events need to be aggregated, rolled up, and validated so that both systems reconcile cleanly and consistently. That includes handling partial completions, split batches, substitutions, and last-minute adjustments without breaking planning logic or historical traceability.

Cross-System Entity Matching and Master Data Alignment: A product, machine, or supplier must be recognized identically across systems. That requires shared master data models, global identifiers, and lookup logic that translates between naming conventions and data schemas. Without this, integration creates confusion instead of clarity.

Process-Aware Integration Orchestration: It’s not enough to move data. You must preserve process logic. If a nonconformance triggers a quality hold, that must cascade into MES, inventory management, and scheduling workflows. This requires orchestration engines or business rule layers that can route, validate, and prioritize events based on business logic.

System-Specific Connectors and Interface Adapters: Each system exposes its own set of APIs, protocols, or data formats, including IDocs for SAP, REST APIs for Salesforce, and file drops for legacy systems. Integration layers must include translators or adapters that wrap these into clean, resilient, and monitored interfaces.

Event-Driven Messaging Architecture: Most ERP systems operate in batches; most production systems operate in real time. Bridging them requires message brokers (such as an MQTT broker or Apache Kafka) and event streaming platforms that can decouple timing, ensure delivery, and buffer data without loss or overload.

Data Quality Assurance and Error Recovery Mechanisms: When something breaks, and it will, your integration must fail gracefully. That means retry logic, transactional rollbacks, exception queues, and alerting for humans to intervene. No black boxes, no silent failures.

Audit Trails and Data Lineage Tracking: As data flows across systems, traceability matters. Every transaction, update, and correction must be logged, timestamped, and linked back to its source system. This supports compliance, accountability, and debugging when things go sideways.

Scalable Integration Governance: As system count and complexity grow, integration cannot remain tribal knowledge. You need a centralized catalog of integrations, version-controlled logic, and standardized onboarding for new systems, supported by governance policies that define ownership, maintenance, and change control.
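The transaction mapping item above is easier to picture with a small example. Here is a minimal sketch, under simplified assumptions, of rolling many MES events up to the work-order level and comparing the totals against what ERP ordered. The `MesEvent` shape and the status labels are hypothetical and ignore split batches and substitutions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class MesEvent:
    work_order: str
    good_qty: int
    scrap_qty: int


def roll_up(events: list) -> dict:
    """Aggregate many MES events into one total per work order."""
    totals = defaultdict(lambda: {"good": 0, "scrap": 0})
    for event in events:
        totals[event.work_order]["good"] += event.good_qty
        totals[event.work_order]["scrap"] += event.scrap_qty
    return dict(totals)


def reconcile(ordered_qty: dict, events: list) -> dict:
    """Compare ERP ordered quantities with rolled-up MES completions."""
    totals = roll_up(events)
    status = {}
    for work_order, ordered in ordered_qty.items():
        good = totals.get(work_order, {"good": 0})["good"]
        if good == 0:
            status[work_order] = "not_started"
        elif good < ordered:
            status[work_order] = "partial"
        elif good == ordered:
            status[work_order] = "complete"
        else:
            status[work_order] = "over_reported"
    # Events that reference a work order ERP does not know about.
    for work_order in totals.keys() - ordered_qty.keys():
        status[work_order] = "unknown_work_order"
    return status


if __name__ == "__main__":
    events = [MesEvent("WO-1", 40, 2), MesEvent("WO-1", 60, 1), MesEvent("WO-2", 10, 0)]
    print(reconcile({"WO-1": 100, "WO-2": 50, "WO-3": 20}, events))
```

Flagging mismatches explicitly, instead of silently overwriting one system with the other, is what keeps planning logic and traceability intact.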

This isn’t just about plumbing. It’s about system-level coherence. When done right, Business Systems Integration turns departments into a connected enterprise, where business strategy flows seamlessly into execution, and execution flows back as insight. It creates the operational trust, transparency, and coordination that transformation depends on.
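Failing gracefully, as the error recovery item above calls for, usually comes down to a few simple patterns. Here is a minimal sketch of retry with exponential backoff plus an exception queue for messages that still fail. The `deliver_with_retry` function, its parameters, and the list used as a queue are hypothetical. A production integration layer would typically rely on its broker's or middleware's own mechanisms.

```python
import time


def deliver_with_retry(message, send, exception_queue,
                       max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Try to deliver a message; park it for human review if every attempt fails.

    `send` is any callable that raises an exception on failure.
    """
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            send(message)
            return True
        except Exception as exc:  # broad on purpose: any failure should be captured
            last_error = exc
            if attempt < max_attempts:
                # Exponential backoff: base_delay, then 2x, then 4x, and so on.
                sleep(base_delay * 2 ** (attempt - 1))
    # No silent failure: keep the message and the reason it failed.
    exception_queue.append({
        "message": message,
        "error": repr(last_error),
        "attempts": max_attempts,
    })
    return False


if __name__ == "__main__":
    def always_down(message):
        raise ConnectionError("ERP endpoint unreachable")

    parked = []
    delivered = deliver_with_retry({"work_order": "WO-1"}, always_down, parked,
                                   base_delay=0.0)
    print(delivered, len(parked))
```

The exception queue is the important part. Retries handle brief outages, but anything that still fails ends up somewhere a person can see it and act on it.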

Business Requirements:

Business systems integration only creates value if the people and processes using those systems are aligned. That means unifying how different functions think about work, data, and success.

Training in Data Interpretation Across Functions: Planners, buyers, and customer service reps must be trained not just in reading dashboards, but in understanding what production data means for their function, and how to respond.

Shared Metrics and KPIs: Teams need a common language. Quality, cost, delivery, and performance indicators must be standardized so everyone’s pulling in the same direction.

Process Harmonization: Data from the shop floor must match how business systems interpret it. This requires aligning work orders, inventory IDs, failure codes, and more, often across departments or global sites.

Data Governance and Ownership: Integrated systems need clearly defined owners for data integrity, system performance, and change management. Without governance, integration becomes noise.

Cross-functional Collaboration Culture: Manufacturing no longer operates on its own timeline. Every update, exception, or issue can now impact finance, supply chain, or customers. That means better communication, escalation paths, and collaboration tools.

Ultimately, this step is about turning the business into a single, aligned system, not just technically, but behaviorally.

Industry 3.4: Scalable Infrastructure

Industry 3.4 is where digital transformation either becomes sustainable or starts to break under its own weight. At this stage, the focus shifts from proving things work to ensuring they keep working as scale, complexity, and expectations increase.

This is about infrastructure decisions. Cloud versus on-prem. Network capacity and reliability. Data storage and retention. Compute availability. Integration patterns. Security and governance. These are not abstract IT topics. They determine how fast you can move, what you can add, and how much friction every new initiative introduces.

Scalable infrastructure means you can grow without re-architecting every time. Add plants without redesigning integrations. Increase data volumes without degrading performance. Introduce new analytics, AI, or optimization use cases without destabilizing operations. When infrastructure is right, expansion feels incremental. When it is wrong, every change becomes a project.

Industry 3.4 typically advances in parallel with business system integration. As MES, ERP, quality, maintenance, and supply chain systems are connected, the infrastructure must support higher data velocity, larger workloads, and stricter availability requirements. Data has to move quickly. Systems have to respond reliably. Downtime becomes unacceptable.

This is the dividing line between an architecture that enables progress and one that quietly limits it. A scalable foundation removes infrastructure as a constraint. It makes cloud optimization realistic, advanced analytics practical, and enterprise intelligence achievable. Without it, everything slows down.

Value Gained:

The real value of scalable infrastructure is that it removes constraints. It’s what allows organizations to experiment, expand, and improve without being shackled by outdated servers, legacy systems, or limited network bandwidth.

Operational flexibility: New technologies, new product lines, or entirely new sites can be brought online quickly and with minimal friction.

Faster insight-to-action loops: Real-time data can be captured, processed, and visualized without lag, enabling predictive and prescriptive tools to actually function at speed.

Support for advanced workloads: Machine learning models, digital twins, large-scale simulations, and enterprise-wide analytics all require compute and storage flexibility only scalable infrastructure can offer.

Improved cost efficiency: Cloud-native platforms allow organizations to pay for what they use, automatically scale during peaks, and avoid the cost of idle physical infrastructure.

Enterprise-wide consistency: With a shared infrastructure layer, different teams and sites can build on the same foundation, ensuring consistency in data, security, and capabilities.

Put simply, scalable infrastructure is the difference between running a smart pilot and scaling a smart operation.

Technical Requirements:

This is often one of the most difficult and underestimated stages of transformation. IT and OT systems weren’t originally designed to work together, let alone speak a shared language. OT prioritizes reliability, control, and determinism. IT focuses on flexibility, scalability, and user access. Reconciling these domains requires more than connections. It requires intentional, robust architecture that respects their differences while enabling deep interoperability.

To build that foundation, organizations must invest in:

Unified Namespace (UNS): A centralized, logical directory that organizes and exposes all data points in a way that any system (ERP, MES, SCADA, QMS) can browse, subscribe to, and interpret. It provides a single, contextualized view of the business, bridging IT and OT layers.

Standardized Semantic Data Models: These define shared meanings across systems. A downtime event must be labeled, coded, and understood the same way whether it appears in MES, CMMS, or a dashboard. Semantic alignment is critical to automation, reporting, and analysis.

Contextual Tagging and Metadata Enrichment: Every piece of data (sensor reading, transaction, event, etc.) must be wrapped with relevant context: what machine, which operator, which product, what shift. Context is what turns data into insight.

Middleware and Integration Engines (e.g., MQTT brokers, OPC UA servers, REST APIs): These tools allow data to move between systems with different architectures, security protocols, and communication standards. They normalize and translate data in real time.

Time Synchronization Across Systems: All data must align on a common time base to support accurate correlation, traceability, and analytics. That means time-stamped events, synchronized clocks, and latency-tolerant architecture.

ETL Pipelines and Data Validation Rules: Extract-Transform-Load pipelines prepare data for consumption by various systems, ensuring consistency, cleansing out anomalies, and applying logic for derived values.

Data Governance Frameworks: Integration increases data exposure. Organizations need policies to define access control, lineage tracking, and stewardship roles, ensuring quality and trust across the data lifecycle.

Scalable API Layer: Clean, documented APIs let third-party systems and internal tools query, update, and subscribe to key business and operational data without creating bottlenecks or brittle point-to-point links.

Security and Segmentation Controls: More connections mean more risk. Segmentation, encryption, access control, and zero-trust models must be in place to ensure secure, compliant integration.

This architecture doesn’t just connect data pipelines; it creates meaningful, structured, accessible insight across the business.
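To make the Unified Namespace, contextual tagging, and time synchronization items a little more concrete, here is a minimal sketch of how one sensor reading might be addressed and wrapped with context before it is published. The hierarchy levels, field names, and sample values are my own assumptions for illustration; they are not a standard, and the sketch deliberately stops short of talking to a real broker.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional

# Hypothetical ISA-95-style hierarchy; pick whatever levels fit your business.
UNS_LEVELS = ("enterprise", "site", "area", "line", "cell")


@dataclass(frozen=True)
class Context:
    """The 'what machine, which operator, which product, what shift' wrapper."""
    machine: str
    operator: str
    product: str
    shift: str


def uns_topic(location: dict, tag: str) -> str:
    """Build one consistent topic path, e.g. acme/plant_1/packaging/line_3/filler/temperature."""
    missing = [level for level in UNS_LEVELS if not location.get(level)]
    if missing:
        raise ValueError(f"missing hierarchy levels: {missing}")
    parts = [location[level] for level in UNS_LEVELS] + [tag]
    return "/".join(p.strip().lower().replace(" ", "_") for p in parts)


def build_payload(value: float, unit: str, context: Context,
                  ts: Optional[datetime] = None) -> str:
    """Wrap a raw value with unit, context, and a UTC timestamp."""
    ts = ts or datetime.now(timezone.utc)
    if ts.tzinfo is None:
        # Naive timestamps cannot be aligned on a common time base.
        raise ValueError("timestamp must be timezone-aware")
    return json.dumps({
        "value": value,
        "unit": unit,
        "timestamp": ts.astimezone(timezone.utc).isoformat(),
        "context": asdict(context),
    }, sort_keys=True)
```

The topic string and JSON payload could then be handed to whatever middleware you already run. The point of the sketch is only that naming, context, and time base are decided once, in one place, rather than separately by every consuming system.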

Business Requirements:

Business systems integration only creates value if the people and processes using those systems are aligned. That means unifying how different functions think about work, data, and success.

Training in Data Interpretation Across Functions: Planners, buyers, and customer service reps must be trained not just in reading dashboards, but in understanding what production data means for their function, and how to respond.

Shared Metrics and KPIs: Teams need a common language. Quality, cost, delivery, and performance indicators must be standardized so everyone’s pulling in the same direction.

Process Harmonization: Data from the shop floor must match how business systems interpret it. This requires aligning work orders, inventory IDs, failure codes, and more, often across departments or global sites.

Data Governance and Ownership: Integrated systems need clearly defined owners for data integrity, system performance, and change management. Without governance, integration becomes noise.

Cross-functional Collaboration Culture: Manufacturing no longer operates on its own timeline. Every update, exception, or issue can now impact finance, supply chain, or customers. That means better communication, escalation paths, and collaboration tools.

Industry 3.5: Collaborative Operations

By the time an organization reaches Industry 3.5, it’s no longer just about streamlining machines or connecting systems. It’s about empowering people, not by replacing them, but by augmenting them. Collaborative operations is the phase where humans and digital tools, robots, and immersive interfaces begin to work side by side, unlocking new levels of safety, precision, learning, and creativity.

This is where digital transformation shifts from systems and data to human experience and capability. And while it might look like the most futuristic step in the 3.x series, it’s deeply grounded in frontline needs, whether it’s reducing ergonomic strain, enabling just-in-time training, or giving workers superpowers through real-time visual assistance.

It’s not automation replacing humans. It’s automation finally collaborating with them.

Value Gained:

The core value of collaborative operations is amplification. You’re not just doing things faster, you’re doing them better, safer, and in ways that were previously impossible.

Improved productivity: Collaborative robots (cobots) handle repetitive or strenuous tasks while humans focus on precision or decision-making, reducing fatigue and improving throughput.

Increased safety: Robots designed for human interaction can work alongside employees without cages while keeping risk low. This includes flagging issues, pausing for safety, or assisting with heavy loads.

Enhanced worker training and skill development: AR/VR interfaces offer immersive, on-the-job training and visual instructions, reducing ramp-up time and increasing knowledge retention.

Faster and more adaptive workflows: Operators can receive real-time guidance, error notifications, or workflow changes directly through AR overlays or wearable devices, minimizing downtime and mistakes.

Greater worker satisfaction: Empowered employees feel less like cogs in a machine and more like partners in a smarter process.

Technical Requirements:

Collaborative operations rely on a suite of human-centered technologies that are responsive, intuitive, and built for dynamic environments. These tools must integrate seamlessly into workflows, augmenting rather than disrupting human effort.

Collaborative Robotics (Cobots): Robots that can operate safely alongside humans without fencing, using built-in force sensing, vision systems, and task programming to perform actions like pick-and-place, assembly, or machine tending.

AR/VR Hardware and Interfaces:

AR headsets (e.g., HoloLens, Magic Leap) overlay digital content onto the real world, guiding workers through tasks or visualizing hidden system states.

VR headsets create immersive environments for training, simulation, or remote inspection, especially valuable in hazardous or complex environments.

AR/VR Software Platforms: Authoring tools and runtime engines (e.g., Unity, Vuforia, PTC, or custom solutions) that manage spatial awareness, track hand movements, and trigger context-specific overlays or instructions.

Edge and Wireless Connectivity: Low-latency networks (5G, Wi-Fi 6) ensure real-time data delivery to and from wearable devices, cobots, and sensors without lag or dropouts.

Sensor Fusion and Environment Mapping: Collaborative environments require systems that combine visual, tactile, and positional data to understand human presence, interpret gestures, and safely navigate shared workspaces.
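As a rough illustration of what sensor fusion in a shared workspace can mean in practice, the sketch below combines several distance readings conservatively and scales robot speed down as a person gets closer. The thresholds and function names are hypothetical, chosen only for the example. This is not a safety-rated implementation and is not derived from any standard; real collaborative cells rely on certified safety controllers and a formal risk assessment.

```python
from typing import Optional, Sequence


def fused_distance(readings_m: Sequence[Optional[float]]) -> float:
    """Combine distance-to-human readings from several sensors.

    Conservative rule: trust the closest valid reading. If no sensor
    reports at all, assume a person is right next to the robot.
    """
    valid = [r for r in readings_m if r is not None and r >= 0]
    return min(valid) if valid else 0.0


def allowed_speed_fraction(distance_m: float,
                           stop_m: float = 0.5,
                           full_m: float = 2.0) -> float:
    """Map distance to a speed limit between 0.0 (stop) and 1.0 (full speed).

    stop_m and full_m are illustrative placeholders, not recommended values.
    """
    if distance_m <= stop_m:
        return 0.0
    if distance_m >= full_m:
        return 1.0
    # Linear ramp between the stop zone and the full-speed zone.
    return (distance_m - stop_m) / (full_m - stop_m)
```

A vision system, a floor scanner, and a wearable tag might each supply one of the readings. Taking the minimum, and treating silence as danger, is one simple way to make sure a single failed sensor slows the robot down rather than speeding it up.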

Business Requirements:

Collaborative operations don’t succeed through tech alone. The most powerful systems fall flat if workers don’t trust them, know how to use them, or feel like they’re being monitored instead of empowered. That’s why the organizational side is so critical here.

Human-Centered Training Programs: Teams must be trained not just on how to use new tools, but how to interact with them safely and confidently. This includes understanding the robot’s range of motion, how AR overlays function, and how to escalate issues or disengage systems.

Safety Standards and Protocols: Any human-robot collaboration requires rigorous safety design. Organizations must implement ISO-compliant protocols (e.g., ISO 10218 for robotics), conduct regular risk assessments, and ensure digital systems don't distract from situational awareness.

AR/VR Content Development Capacity: Interactive content doesn't write itself. Teams need designers, SMEs, and tools to continuously create, update, and refine AR/VR instructional materials as workflows evolve.

Change Management and Workforce Engagement: Successful adoption depends on how technology is introduced. Workers must be involved early, their feedback respected, and their insights integrated into rollout plans.

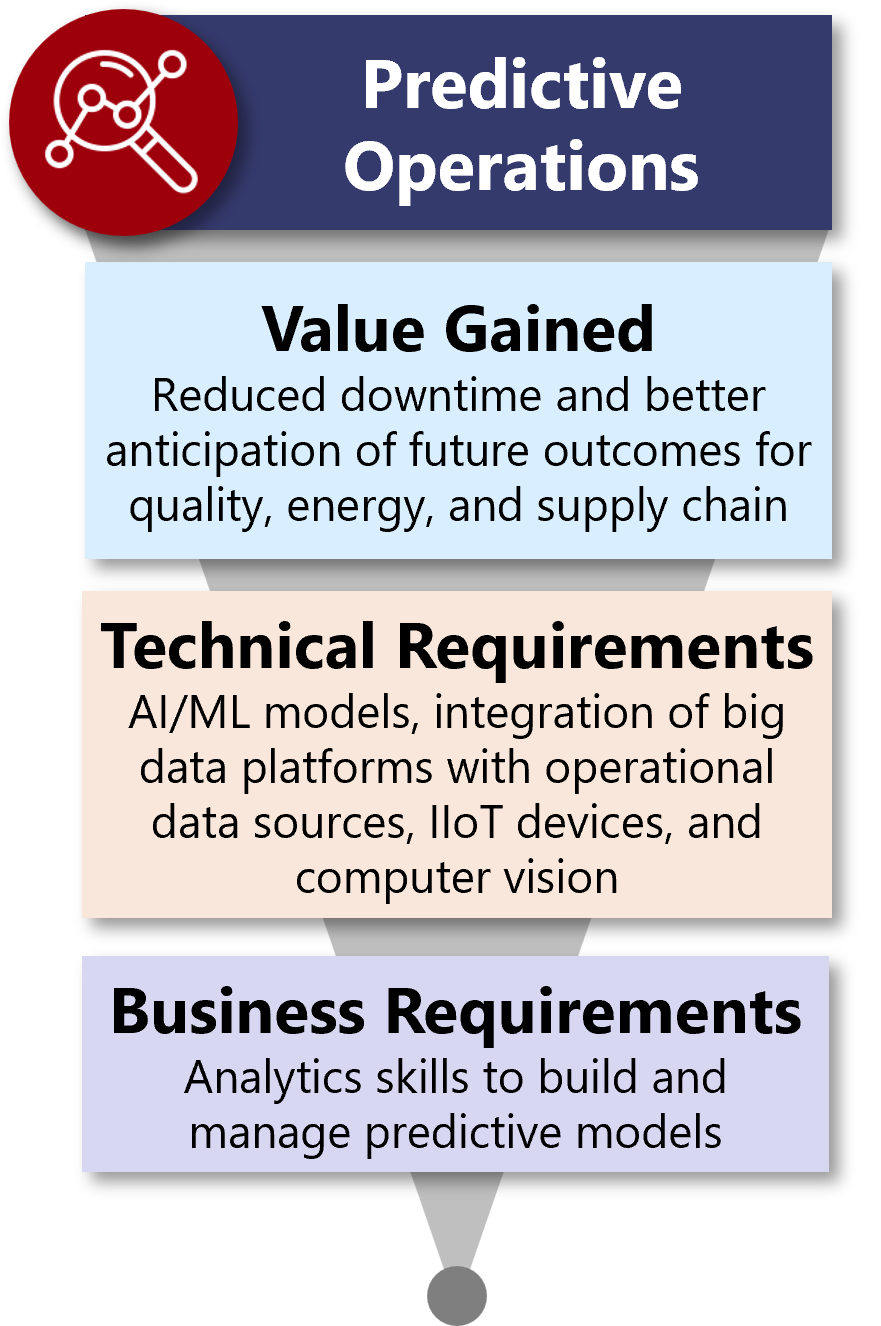

Industry 3.6: Predictive Operations

By the time a manufacturer reaches Industry 3.6, much of the hard work is already behind them. Machines and processes are connected through Industry 3.1. Asset, operator, and production data are digitized through Industry 3.2. Business systems are integrated in Industry 3.3. Scalable infrastructure is in place through Industry 3.4 to support growth and rising complexity. Along the way, data volumes have exploded. Sensors, logs, systems, and transactions are generating streams of information, often in real time and across multiple layers of the operation.

This is where the investment starts to pay off.

Predictive operations is the point where data begins to work for you, not the other way around. Instead of simply reporting what already happened, systems start identifying patterns, trends, and signals that point to what is likely to happen next. Equipment failures can be anticipated before they cause downtime. Quality issues can be detected before scrap is produced. Energy spikes can be forecast before costs escalate. Supply disruptions can be flagged while there is still time to adjust plans.

Most organizations begin this journey with predictive maintenance. It is a logical starting point. The use cases are well understood, the value is measurable, and the return on investment is clear. But Industry 3.6 extends far beyond maintenance. Predictive intelligence spreads across quality, safety, energy, logistics, labor availability, yield optimization, and production planning.

At this stage, prediction becomes embedded into daily operations. Alerts are prioritized based on risk. Decisions are informed by probability, not just thresholds. Teams shift from firefighting to prevention. Data is no longer used only for hindsight or real-time visibility. It becomes a source of foresight.
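One simple way to picture "probability, not just thresholds" is to rank alerts by expected cost: the likelihood of an event multiplied by what it would cost if it happened. The sketch below assumes a model already supplies a failure probability and that someone has estimated the impact; both the class and the field names are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Alert:
    asset: str
    failure_probability: float  # model output between 0 and 1 (assumed)
    impact_cost: float          # estimated cost if the event occurs (assumed)

    @property
    def expected_cost(self) -> float:
        return self.failure_probability * self.impact_cost


def prioritize(alerts: List[Alert]) -> List[Alert]:
    """Order alerts so the highest expected cost comes first."""
    return sorted(alerts, key=lambda a: a.expected_cost, reverse=True)
```

With this ranking, a press that is only 20 percent likely to fail but would cost 50,000 to recover outranks a pump that is 90 percent likely to fail at a cost of 1,000. A pure probability threshold would have put the pump first.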

Value Gained:

By the time an organization reaches the stage of predictive operations, it’s not just generating data, it’s drowning in it. From IIoT sensors to MES logs, ERP events, and quality systems, modern manufacturing environments produce vast volumes of information daily. But most of that data sits idle: logged but unused, visualized but not acted upon.

Predictive operations change that. This is where your data becomes directional. You’re no longer relying on tribal knowledge, gut feel, or lagging reports. Instead, you’re using statistical patterns, machine learning, and real-time signals to forecast what’s about to go wrong, where inefficiencies will appear, and what actions will mitigate risk or boost performance. It transforms your organization from one that reacts to problems to one that anticipates and prevents them, continuously and systemically.

Lower Unplanned Downtime: Reduces costly disruptions and keeps production running smoothly by anticipating and avoiding equipment issues.

Higher Product Quality: Increases consistency and customer satisfaction by identifying problems before they impact the final product.

Optimized Energy Usage: Lowers operational costs and improves sustainability by better aligning energy consumption with production needs.

Stronger, More Resilient Supply Chains: Enhances reliability and responsiveness by identifying and mitigating risks before they impact delivery.

Reduced Firefighting and Faster Decisions: Helps teams act quickly and confidently, shifting from reactive problem-solving to proactive planning.

Improved Workforce Planning: Aligns labor with demand more effectively, leading to better productivity and less stress on staff.

Technical Requirements:

Building predictive operations isn’t just about plugging AI into your factory. It requires rethinking how your data flows, how your systems interact, and how intelligence is created and used. Prediction is not a single tool; it’s the outcome of a carefully built ecosystem that brings together machine data, business context, scalable processing power, and algorithms that learn over time. This means everything from shop floor sensors and computer vision inputs to ERP events and cloud-based model training environments needs to work together: cleanly, contextually, and continuously.

And while it's tempting to see predictive operations as a technical milestone that happens once, the reality is far more iterative. It’s not a dashboard you install, it’s an evolving capability that matures over time. As models are deployed, their accuracy must be monitored, refined, and adapted to changes in processes, equipment, and external factors. For that reason, sustainable predictive capability requires infrastructure, data governance, domain expertise, and the ability to deploy and retrain models at scale.
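To show what "monitored, refined, and adapted" can look like at its simplest, here is a sketch of a rolling accuracy check that flags a model for retraining once it falls too far below its validated baseline. The class name, window size, and tolerance are assumptions for the example; a real MLOps platform would track far more than one metric.

```python
from collections import deque
from typing import Optional


class DriftMonitor:
    """Compare recent prediction accuracy against a validated baseline."""

    def __init__(self, baseline_accuracy: float, window: int = 50,
                 tolerance: float = 0.10) -> None:
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Only the most recent `window` outcomes are kept.
        self.outcomes = deque(maxlen=window)

    def record(self, predicted: bool, actual: bool) -> None:
        """Store whether a prediction matched what really happened."""
        self.outcomes.append(predicted == actual)

    @property
    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        """Flag the model only once a full window of outcomes is available."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy < self.baseline - self.tolerance
```

The important design choice is that outcomes flow back in. Without a record of what actually happened after each prediction, there is nothing to compare against and drift goes unnoticed.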

AI/ML Models Trained on Industrial Use Cases: These can include time-series forecasting, anomaly detection, classification models for quality, or predictive scoring for asset health. Off-the-shelf models rarely cut it on their own; you need models trained on your own data, machines, and context.

Integrated Big Data Platforms Bridging OT and IT: Prediction demands that siloed data becomes unified. This includes SCADA and sensor data, MES transaction logs, ERP order flows, CMMS history, and even supply chain disruptions, all joined in a centralized, searchable, and contextualized platform.

High-Frequency IIoT Devices and Sensors: Machines must be equipped with sensors that generate reliable, real-time data on key parameters like vibration, temperature, pressure, torque, and cycle time. Without sensor fidelity, prediction is noise.

Edge Computing for Localized Analysis: Some models need to run close to the process. This is especially true when low latency, high uptime, or data privacy is a requirement. That means edge gateways or embedded processors capable of performing analytics and reacting in real time.

Computer Vision Systems for Pattern Recognition: In processes where visual confirmation matters (e.g., inspection, safety, ergonomics), predictive systems can leverage cameras and AI models to recognize failure precursors or unsafe behaviors not detectable with traditional sensors.

ETL Pipelines, Feature Engineering, and Data Enrichment: Predictive models require clean, structured input, not just raw sensor logs. Data must be processed to extract trends, normalize inputs, calculate derivatives, and enrich events with context (e.g., shift, product, operator, line).

MLOps Tooling for Model Management: Just as you wouldn’t deploy unversioned software, you shouldn’t deploy unmanaged models. Predictive models require a lifecycle of training, validation, deployment, monitoring, and retraining. Platforms must support this full lifecycle, with metrics on model drift, accuracy, and versioning.

Data Quality Frameworks and Feedback Loops: Predictions are only as good as the data that feeds them. That means monitoring for missing values, detecting sensor failures, tracking false positives, and feeding outcome data back into the training loop to improve future performance.

Interoperable APIs for System Integration: Predictions must be available where decisions are made, whether that’s in a maintenance dashboard, a scheduling engine, or a QMS. APIs must allow secure, low-latency delivery of prediction outputs to downstream applications.

Cloud or Hybrid Compute Resources for Model Training and Scaling: Training and running predictive models, especially across large datasets or video feeds, can be resource-intensive. Cloud or hybrid infrastructure ensures scalability without overloading local systems.
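The feature engineering and anomaly detection items above can be sketched with nothing more than a rolling window. The example below derives a rolling mean, a step change, and a z-score from a raw series, then flags readings that sit far outside recent behavior. It is a deliberately simple statistical stand-in, not a trained model, and the window size and threshold are assumptions you would tune on your own data.

```python
import math
from statistics import mean, stdev
from typing import Dict, List


def rolling_features(values: List[float], window: int) -> List[Dict[str, float]]:
    """Turn raw readings into features based on the preceding window."""
    features = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is maximally unusual.
            z = 0.0 if values[i] == mu else math.inf
        else:
            z = (values[i] - mu) / sigma
        features.append({
            "index": i,
            "value": values[i],
            "rolling_mean": mu,
            "delta": values[i] - values[i - 1],  # step change from prior reading
            "z_score": z,
        })
    return features


def detect_anomalies(values: List[float], window: int = 5,
                     z_threshold: float = 3.0) -> List[int]:
    """Return the positions of readings far outside recent behavior."""
    return [int(f["index"]) for f in rolling_features(values, window)
            if abs(f["z_score"]) > z_threshold]
```

Fed a vibration series that hovers around 1.0 and then spikes to 5.0, this flags only the spike. In a real deployment these features would also be enriched with shift, product, and line context, and would feed a model rather than a fixed threshold.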

Business Requirements:

Predictive operations aren’t just technical, they’re strategic. And they require new business behaviors to succeed. If predictions aren’t understood, trusted, or acted on, they have zero value, no matter how accurate the model is.

Analytics Literacy Across Roles: From data engineers to frontline supervisors, everyone must understand how to read predictions, interpret confidence scores, and make timely decisions based on those insights.

Model Ownership and Governance: Each predictive model should have a clear owner responsible for its performance, retraining cadence, and alignment with business goals, alongside IT and data teams ensuring compliance and risk management.

Action Protocols and Escalation Paths: What happens when a model raises a flag? What’s the SOP for intervening? Organizations need clear response workflows and tools to help teams act with speed, not uncertainty.

Trust-Building Through Transparency: Users trust what they understand. That means showing how predictions are made, validating them regularly, and using false positives or misses to improve both systems and processes.

Cross-Functional Collaboration: Predictive signals often affect multiple functions. A machine anomaly may impact quality, scheduling, and maintenance simultaneously. Teams need to work from a shared source of truth with shared context.

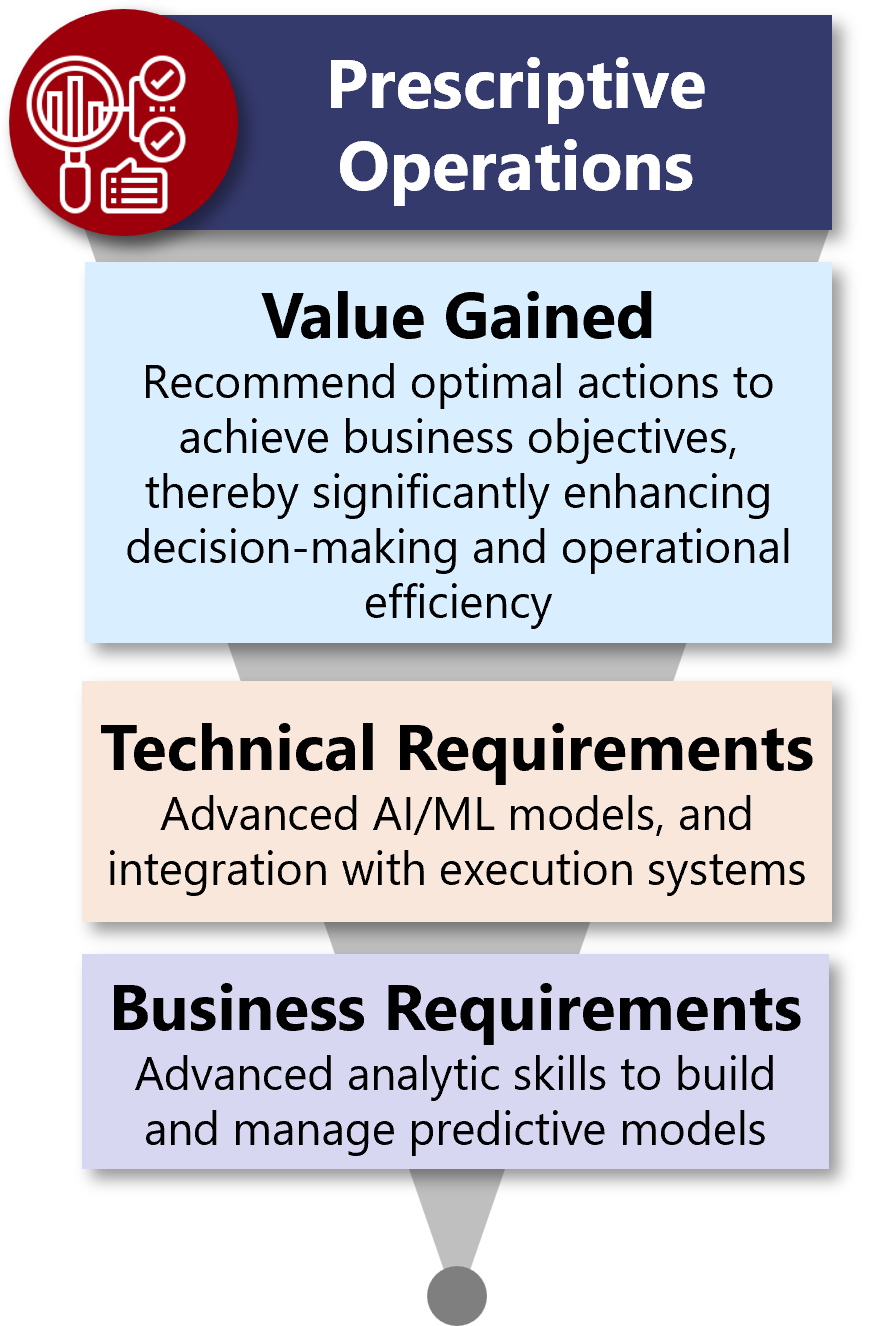

Industry 3.7: Prescriptive Operations

Prescriptive operations represent a natural and necessary evolution from predictive operations. Where predictive systems tell you what’s likely to happen, prescriptive systems go further: they recommend what to do next. This isn’t just alerting you to risk or opportunity; it’s guiding your response based on business priorities, constraints, and available options.

This capability builds on the momentum of earlier stages. The system integration of 3.3 provides shared visibility across business and operations. The scalable infrastructure of 3.4 ensures data and decisions can move quickly and reliably. And the models developed in 3.6 give you accurate forecasts to work from. Now, in 3.7, those inputs are paired with decision logic that translates insight into real-time, goal-aligned recommendations.

Whether it’s rerouting a shipment, adjusting production parameters, or rescheduling maintenance, prescriptive operations deliver targeted, timely actions, minimizing delay, reducing decision fatigue, and improving operational consistency. It's not just about knowing what might happen. It's about always knowing your best next move.

Value Gained:

The real value of prescription is precision under pressure. Where prediction offers insight, prescription delivers confidence in action, especially in fast-moving environments where time, cost, and quality are always competing.

Operational Efficiency: Improves output and productivity by guiding teams with clear, real-time decision options instead of guesswork or outdated procedures.

Better Resource Utilization: Makes smarter use of machines, people, and materials by adjusting plans in response to what’s happening in the moment.

Faster Response to Disruptions: Shortens recovery time by providing immediate, guided actions when things go off track.

Higher Consistency: Creates more predictable performance across shifts and sites by standardizing decisions through shared, objective logic.

Stronger Alignment with Business Goals: Ensures day-to-day decisions support larger objectives, whether that’s lowering costs, boosting quality, or hitting sustainability targets.

Less Decision Fatigue: Frees people from routine problem-solving so they can focus on high-impact work that requires human judgment.

Technical Requirements:

Prescriptive operations build squarely on the data maturity and model infrastructure laid down in 3.6. But while prediction relies on identifying what’s likely to happen, prescription demands you also determine what should happen next, and how that decision gets made and executed.

This means expanding beyond raw data pipelines, sensor networks, and forecasting models. It requires intelligent orchestration: optimization engines that evaluate tradeoffs, APIs that connect to real systems of execution, and governance layers that ensure transparency and trust in system-generated recommendations.

Optimization and Constraint-Solving Engines: Prescription requires logic that goes beyond prediction. Systems must weigh multiple variables (cost, quality, time, capacity, etc.) and apply constraint-based or heuristic optimization to recommend the most favorable action. This includes solvers for scheduling, routing, recipe tuning, and resource allocation.

Prescriptive Rules Engines and Workflow Logic: Not all decisions need AI. Many operational decisions can be driven by codified rules and thresholds that adapt to real-time data. These engines formalize tribal knowledge and business logic into scalable, automated playbooks, executed consistently across shifts and teams.

Action Interfaces with Execution Systems (MES, CMMS, ERP, WMS): A recommendation has no impact if it sits in isolation. Prescriptive systems must interface bi-directionally with operational platforms, triggering schedule changes, maintenance tickets, inventory moves, or recipe updates directly in the systems that execute work.

Decision State Modeling and Escalation Logic: Prescriptive environments require models that understand the state of operations and whether a system, operator, or manager is best suited to act. This includes escalation rules, fallback plans, and logic for when to request human confirmation.

Context-Aware Recommendation Engines: Decisions must be situationally aware. A “slow down machine” prescription might be optimal during a maintenance window, but harmful during a rush order. Engines must account for current priorities, production context, and strategic goals before recommending action.

User Interfaces for Explanation and Override: Operators and managers need more than a recommendation, they need to understand why it was made. Prescriptive systems must offer clear explanations, confidence levels, and the ability to override or adjust recommendations with traceability.

Simulation and What-If Testing Environments: Before rolling out prescription at scale, teams need sandboxes to simulate outcomes. These tools let engineers and planners test the impact of model-driven decisions under different constraints or conditions, building trust before automation.

Decision Logging and Feedback Integration: Every prescriptive action must be logged, evaluated, and compared to actual outcomes. These feedback loops allow the system to learn from past decisions and continuously refine its recommendations for improved future performance.

Alignment Frameworks for Decision Objectives: What does “optimal” mean in your operation? Prescriptive systems require clearly defined objective functions (maximize throughput, minimize cost, balance risk, etc.) that can be dynamically weighted as business conditions evolve.
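Several of these requirements fit into one small sketch: hard constraints, a weighted objective, context-dependent weights, an explanation of the scores, and a hand-off to a human when the call is too close. Everything here is illustrative. The action names, the normalized 0 to 1 scales, and the confirmation margin are assumptions, and a production system would typically use a proper solver rather than scoring a short list.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Action:
    name: str
    cost: float          # normalized 0..1, lower is better (assumed scale)
    quality_risk: float  # normalized 0..1, lower is better (assumed scale)
    delay_hours: float


def recommend(actions: List[Action], weights: Dict[str, float],
              max_delay_hours: float, confirm_margin: float = 0.05) -> dict:
    """Pick the lowest-scoring feasible action and explain the choice."""
    if max_delay_hours <= 0:
        raise ValueError("max_delay_hours must be positive")

    def score(a: Action) -> float:
        # Weighted objective; the weights encode current business priorities.
        return (weights["cost"] * a.cost
                + weights["quality"] * a.quality_risk
                + weights["delay"] * a.delay_hours / max_delay_hours)

    # Hard constraint: anything that takes too long is never recommended.
    feasible = [a for a in actions if a.delay_hours <= max_delay_hours]
    if not feasible:
        return {"action": None, "needs_human": True, "scores": {}}

    ranked = sorted(feasible, key=score)
    best = ranked[0]
    # Escalate when the runner-up is nearly as good as the winner.
    close_call = (len(ranked) > 1
                  and score(ranked[1]) - score(best) < confirm_margin)
    return {
        "action": best.name,
        "needs_human": close_call,
        "scores": {a.name: round(score(a), 4) for a in ranked},
    }
```

Run the same candidate actions with delay weighted heavily and the recommendation favors the fastest fix; weight cost heavily instead, as you might during a maintenance window, and slowing the machine wins. Returning the scores alongside the choice gives operators something to inspect, override, and log.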

Business Requirements:

Prescriptive operations raise the bar for organizational capability. You’re no longer just asking “what do we see?” but “what do we do about it, and how fast can we do it?”

Deep analytics and modeling capability: Teams need data scientists and engineers who can design, build, and tune both predictive and prescriptive models, with a clear understanding of operational constraints.

Decision governance and trust frameworks: Stakeholders must understand and agree on what kinds of decisions the system is allowed to make or recommend, and when humans step in.

Operational agility and change readiness: You must be able to take action quickly. This requires teams to be trained, systems to be interoperable, and workflows to be responsive to intelligent input.

Cross-functional alignment around optimization goals: You can’t prescribe effectively if departments are optimizing for different things. Cost, quality, throughput, and sustainability must be balanced intentionally and collaboratively.

Accountability and transparency in outcomes: Prescriptive operations must be auditable, so teams can learn from what worked, what didn’t, and how to improve both models and decisions over time.

Industry 3.8: Product/Service Digitalization

From Smart Operations to Smart Offerings

While earlier Industry 3.x stages focused on internal transformation, optimizing processes, connecting assets, and embedding intelligence, Industry 3.8 turns that capability outward. This is where digital capabilities begin to transform not just how products are made, but what the product is, and how it continues to deliver value after it leaves the factory.

Product/Service Digitalization means embedding connectivity, intelligence, and adaptability into what you offer. It’s a shift from discrete, static products to connected, evolving solutions, whether that’s usage-aware equipment, cloud-connected platforms, or data-driven service extensions.

This stage empowers organizations to deliver richer experiences and extract deeper insights from real-world usage. It often opens the door to new forms of engagement with customers, new service models, and recurring value beyond the point of sale. As these digitalized offerings take shape, they often expose new opportunities, and demands, for rethinking how value is packaged, priced, and delivered.

Value Gained:

Digitalizing your products or services is about extending the impact of everything you’ve built operationally into the offering itself. Rather than stopping at smart manufacturing, this stage enables smarter products: ones that adapt, communicate, and evolve in the hands of the customer. It shifts your value delivery from a one-time transaction to a continuous relationship that extends throughout the product’s lifecycle.

The benefits here are not just about bells and whistles. They’re about improving reliability, usability, service, and design, all through real-time data and digital interfaces that connect customers, engineers, and service teams like never before. By enabling visibility into how products perform in the field, organizations can respond faster, support better, and innovate more effectively.

What you gain is not a new business model (yet), but a smarter, more resilient way to deliver the core value your products are already meant to provide.

Improved Customer Experience: Delivers more helpful, user-friendly, and responsive interactions through connected features, usage feedback, and digital touchpoints.

Greater Product Usability and Reliability: Enables features like remote diagnostics, usage alerts, and software updates that reduce friction and enhance product value in everyday use.

Faster Product Improvement Cycles: Provides real-world performance data that helps R&D and engineering teams make informed, rapid design enhancements.

Reduced Service Costs: Improves efficiency in support and field service through condition-based maintenance and issue detection before failure occurs.

Enhanced Support Capabilities: Gives service teams greater visibility into how products are used and where problems occur, improving response accuracy and speed.

Technical Requirements:

Delivering digitalized offerings requires a modern, connected, and secure technology foundation that supports real-time communication and continuous improvement.

IoT Connectivity Infrastructure: Devices must reliably connect and communicate across networks, whether via edge, cloud, or hybrid systems.

Cybersecurity and Data Protection: Systems must ensure secure transmission and storage of sensitive customer and usage data.

Advanced Software Development: Embedded software, apps, and platforms must support product logic, updates, and user interfaces.

Scalable Data Management: Continuous data collection requires storage, processing, and integration that supports analytics and feedback.
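Here is a small sketch of what a connected product might do with its own telemetry once it reaches your platform: turn usage and condition data into service actions such as condition-based maintenance, remote diagnostics, or a software update offer. The message fields, thresholds, and version number are all hypothetical and stand in for whatever your product actually reports.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Illustrative placeholders, not recommended values.
SERVICE_INTERVAL_HOURS = 2000.0
MAX_MOTOR_TEMP_C = 85.0
LATEST_FIRMWARE = "2.4.0"


@dataclass(frozen=True)
class Telemetry:
    device_id: str
    firmware: str
    operating_hours: float
    motor_temp_c: float


def parse_version(version: str) -> Tuple[int, ...]:
    """Compare versions numerically so that 2.10.0 is newer than 2.4.0."""
    return tuple(int(part) for part in version.split("."))


def service_actions(t: Telemetry, hours_at_last_service: float) -> List[str]:
    """Translate one telemetry message into follow-up actions."""
    actions = []
    if t.operating_hours - hours_at_last_service >= SERVICE_INTERVAL_HOURS:
        actions.append("schedule_maintenance")    # based on usage, not calendar
    if t.motor_temp_c > MAX_MOTOR_TEMP_C:
        actions.append("open_remote_diagnostic")  # catch the issue before failure
    if parse_version(t.firmware) < parse_version(LATEST_FIRMWARE):
        actions.append("offer_software_update")
    return actions
```

Even logic this basic changes the service conversation. Instead of waiting for a customer call, the support team starts from what the device already reported about itself.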

Business Requirements:

Product/service digitalization is not just a technology initiative, it’s an organizational shift. It demands a new mindset about what your product is, how it evolves, and how value is delivered over time. It also requires collaboration across functions that traditionally worked in sequence. Engineering, IT, product management, marketing, and service now all operate together in a continuous loop of delivery, feedback, and improvement.

Unlike purely physical products, digitalized offerings don’t stop at the point of sale. They require ongoing lifecycle support, customer engagement, and regular updates. This means your teams need the tools, processes, and capacity to think beyond launch and support a product that lives and changes in the field. Digital also demands a sharper customer focus, not just in features, but in usability, support, and overall experience.

Customer-Centric Culture: Teams must prioritize end-user outcomes, focusing on real-world value, usability, and support rather than internal assumptions.

Agile Product Development: Connected offerings require continuous iteration, regular updates, and tight feedback loops, not just long release cycles.

Cross-Functional Coordination: Success depends on synchronized execution across hardware, software, IT, service, and commercial teams.

Support and Service Readiness: Field teams must be equipped to manage connected devices, respond to real-time data, and provide remote assistance when needed.

Industry 3.9: Business Model Transformation

As organizations reach Industry 3.8 and begin embedding intelligence, connectivity, and software into their offerings, the impact extends well beyond technical performance. Products start generating data throughout their lifecycle. Usage patterns become visible. Customer behavior becomes measurable rather than assumed. Support moves from reactive to proactive. Expectations change because customers experience faster response times, better outcomes, and more personalized interactions.

Digitalized products no longer end at the point of sale. They stay connected to the manufacturer long after delivery. This creates a continuous feedback loop between how products are designed, how they are used, and how they are improved. Engineering gains real-world insight. Service teams gain early warning signals. Sales gains evidence of value delivered, not just promises made.

This evolution sets the stage for something more profound. It forces organizations to reconsider what they are actually selling. Industry 3.9 is where the digital foundation becomes a platform for business model reinvention. The focus shifts from smarter products to smarter value delivery. Revenue is no longer tied only to units shipped. It expands to uptime delivered, outcomes achieved, and performance guaranteed.

At this stage, companies begin experimenting with subscription models, service-based offerings, and outcome-driven contracts. Data itself can become a product. Insights can be packaged and sold. Risk can be shared with customers rather than transferred. The relationship changes because incentives change.

This is a move away from transactional thinking. It is about long-term engagement rather than one-time sales. Value is delivered continuously, not just at delivery. Customers become partners in optimization rather than recipients of equipment. Platforms emerge that connect products, services, partners, and ecosystems.

Making this shift is not easy. It requires new operating models. Success metrics must evolve beyond volume and margin. Teams must collaborate across product, software, service, finance, and customer success. Partnerships become strategic rather than transactional.

Industry 3.9 is where digital capability turns into strategic leverage. It is not just about doing things better. It is about doing fundamentally different things, and competing in ways that were not possible before.

Value Gained:

The transformation of your business model isn't just a byproduct of digital capability, it’s the strategic pivot that allows your organization to grow in new directions. As offerings become more connected and intelligent, and as data becomes more integral to value delivery, companies find themselves able to do more than just enhance what they sell, they can redefine what their business actually is.

Business model transformation allows organizations to shift from selling products to delivering outcomes, to move from one-time revenue to recurring models, and to evolve from independent product lines to integrated ecosystems. It opens the door to services that scale differently than manufacturing ever could, and partnerships that create value beyond the boundaries of a single enterprise.

It also enables more resilient revenue streams that are less sensitive to cyclicality, commoditization, or margin pressure, because customers are paying for value experienced, not just goods received. Perhaps most importantly, it positions companies to be not just suppliers, but strategic partners in their customers' success.

This isn’t about doing better business within an old model. It’s about doing new business, and doing it in a way that’s more agile, more aligned, and more defensible over time.

New Value Propositions: Offer outcomes and experiences instead of just physical products, tailored to evolving customer expectations.

Expanded Revenue Streams: Move beyond transactional sales into recurring revenue through services, subscriptions, usage-based pricing, or ecosystem participation.

Flexible Value Delivery Models: Enable hybrid offerings that blend product, service, and digital experiences, customized by customer segment or use case.

Deeper Customer Relationships: Build ongoing engagement through connected offerings, real-time data feedback, and continual service evolution.

Stronger Competitive Differentiation: Create defensible advantages not through the product alone, but through how it is offered, supported, and monetized.

Technical Requirements:

Transforming a business model requires not just vision, but a technology foundation capable of supporting it across multiple systems, partners, and delivery channels.

Advanced Integration Capabilities: Systems must work across product, service, and financial platforms to support new pricing models, entitlement tracking, and performance-based billing.

Flexible and Scalable Architectures: Infrastructure must be adaptable to new digital services, ecosystem expansion, and evolving customer demands without costly rework.

Cloud-Native and API-First Platforms: Digital business models rely on agility and openness, meaning modular systems that integrate with partners, channels, and third-party providers easily.

Unified Data Models and Customer Views: A holistic understanding of product use, customer behavior, and service delivery is essential to drive outcome-based offerings.

Commerce and Subscription Management Tools: Tools that can support trials, renewals, metered billing, upgrades, and service tiering are critical for sustainable revenue operations.
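To make metered and performance-based billing a little more concrete, here is a minimal sketch of how one month of an outcome-based contract might be priced. Everything in it is an assumption for illustration: the `OutcomeContract` fields, the `monthly_invoice` function, and the rule that an uptime shortfall earns the customer a credit. A real subscription platform would handle proration, taxes, and revenue recognition, none of which is shown here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeContract:
    """Hypothetical outcome-based contract for one piece of equipment."""
    base_fee: float           # flat monthly subscription fee
    rate_per_unit: float      # metered charge per unit beyond the allowance
    included_units: int       # units covered by the base fee
    uptime_target: float      # promised availability, e.g. 0.98
    penalty_per_point: float  # credit per percentage point below target


def monthly_invoice(contract, units_produced, uptime):
    """Return the invoice total for one month, never below zero."""
    # Metered part: only units above the included allowance are billed.
    billable_units = max(0, units_produced - contract.included_units)
    usage_charge = billable_units * contract.rate_per_unit

    # Shared-risk part: missing the uptime target earns the customer a credit.
    shortfall_points = max(0.0, contract.uptime_target - uptime) * 100
    uptime_credit = shortfall_points * contract.penalty_per_point

    total = contract.base_fee + usage_charge - uptime_credit
    return max(0.0, round(total, 2))
```

The point of the sketch is the shape of the calculation. The invoice depends on what the customer actually produced and on whether the supplier kept its promise, which is why product, service, and financial systems have to share data.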

Business Requirements:

Business model transformation is not a side project. It’s a reinvention. It changes how value is created, how success is measured, and how nearly every team in the organization operates. Whether you’re adding digital services, offering data insights, layering in software, or moving toward outcome-based contracts or product-as-a-service, each path alters the company’s structure, incentives, and identity.

This is often one of the most difficult phases of transformation because it goes beyond technology or product. It calls into question sales models, revenue recognition, compensation plans, support structures, and customer engagement strategies. When you stop selling a product and start selling uptime, output, or intelligence, your entire operating logic shifts. What used to be a handoff becomes an ongoing relationship. What used to be “support” becomes “success.”

In many cases, this means that most people’s roles evolve, whether that’s how they contribute to value, how they collaborate across functions, or how they’re held accountable. It requires courageous leadership, cross-functional orchestration, and a long-term commitment to shifting both mindsets and mechanics.

Strategic Planning and Execution: A clearly defined transformation roadmap, championed by leadership and aligned to measurable, long-term objectives.

Change Management and Organizational Readiness: Structured programs to help employees understand, accept, and thrive in the new model, including training, communication, and cultural shifts.

Cross-Functional Operating Models: Traditional silos must be reconfigured to enable seamless collaboration across product, service, finance, IT, sales, and customer success teams.

Ecosystem and Partnership Management: As offerings evolve, value delivery increasingly depends on coordinated networks of suppliers, integrators, service providers, and tech partners.

New Financial Structures and Incentives: Revenue recognition, pricing strategies, sales commissions, and KPIs must all be realigned to support recurring, shared-risk, or performance-based models.

Customer Lifecycle Ownership: The organization must shift toward continuous value delivery, requiring robust customer success strategies, service design, and long-term relationship management.

Governance and Risk Management: New business models introduce new liabilities, regulatory considerations, and IP challenges, demanding updated policies and cross-disciplinary governance.

Industry 4.0: Autonomous & Self-Optimizing

This is the point where Industry 4.0 stops being aspirational and starts being operational. After years of investing in connectivity, data, integration, and infrastructure, the system is finally capable of running itself in meaningful ways. This is not about experimentation anymore. It is about production-scale autonomy.

At this stage, AI moves beyond dashboards and recommendations. It moves into control. Decisions that once required human review are now made automatically, within defined limits. Actions that used to wait for approval are executed in real time. The system does not just advise. It acts.

This is where the shift from decision support to autonomous control happens. Predictive models no longer just flag risks. Prescriptive logic no longer just suggests responses. Agentic AI systems take ownership of outcomes. They monitor conditions, evaluate options, select actions, and learn from the results. All of this happens continuously.

Agents operate with clear goals and constraints. One agent may manage throughput. Another may balance quality and yield. Another may optimize energy usage or delivery commitments. They coordinate across systems rather than operating in isolation. MES, planning, quality, maintenance, and supply chain stop behaving like separate tools and start behaving like a single operating system.
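One way to picture agents with their own goals and constraints coordinating on a shared decision is a simple scoring scheme. This is a minimal sketch, not a description of any real agent framework: the `Agent` class, the `coordinate` function, and the weighted-sum rule are all hypothetical. Each agent scores a candidate plan against its own goal and can veto plans that break its constraint.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Plan = Dict[str, float]  # hypothetical plan, e.g. {"speed": 1.0, "temp": 190.0}


@dataclass(frozen=True)
class Agent:
    """Hypothetical agent: one goal (score) and one hard constraint."""
    name: str
    weight: float                       # how much this goal counts overall
    score: Callable[[Plan], float]      # higher is better for this agent
    acceptable: Callable[[Plan], bool]  # False means the agent vetoes the plan


def coordinate(agents: List[Agent], plans: List[Plan]) -> Optional[Plan]:
    """Pick the plan with the best weighted score that no agent vetoes."""
    best_plan, best_total = None, float("-inf")
    for plan in plans:
        if not all(agent.acceptable(plan) for agent in agents):
            continue  # at least one agent's constraint is violated
        total = sum(agent.weight * agent.score(plan) for agent in agents)
        if total > best_total:
            best_plan, best_total = plan, total
    return best_plan  # None means no plan satisfied every agent
```

In practice the tradeoffs are far richer than a weighted sum, but the structure is the same. Goals are explicit, constraints are explicit, and the decision is made across agents rather than inside any one of them.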

Humans are still involved, but their role changes. They define objectives, boundaries, and policies. They monitor performance and intervene by exception. They focus on improvement, governance, and accountability rather than moment-to-moment decisions. Control shifts from manual execution to oversight of autonomous behavior.
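The idea of acting automatically within defined limits and escalating only by exception can be sketched in a few lines. This is an illustration under stated assumptions, not a control-system design: the `Guardrail` class, its fields, and the `review_action` function are hypothetical names, and the limits would come from the people who own the process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrail:
    """Human-defined limits for one controllable parameter (hypothetical)."""
    minimum: float
    maximum: float
    max_step: float  # largest change allowed in a single autonomous move


def review_action(guardrail, current, proposed):
    """Return ("execute", value) or ("escalate", reason)."""
    # Outside the allowed operating range: a human must decide.
    if not guardrail.minimum <= proposed <= guardrail.maximum:
        return ("escalate", "proposed value is outside the allowed range")
    # Inside the range but too large a jump: a human must decide.
    if abs(proposed - current) > guardrail.max_step:
        return ("escalate", "change is larger than the allowed step")
    # Within both limits: the system acts on its own.
    return ("execute", proposed)
```

For example, with `Guardrail(150.0, 200.0, 5.0)`, moving a setpoint from 180.0 to 183.0 is executed automatically, while a jump from 180.0 to 190.0 is escalated. People set the boundaries once. The system applies them on every decision.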

This level of autonomy only works because everything underneath it is stable. Data is trusted. Systems are integrated with shared meaning. Infrastructure can handle real-time decisions at scale. Business rules are explicit. Without those foundations, autonomous control creates risk instead of value.

This is why autonomy consistently sits at the top of the most rigorous maturity frameworks. The acatech Industrie 4.0 Maturity Index ends with adaptability, the stage where systems adjust themselves autonomously, and the Smart Industry Readiness Index likewise places adaptive capability in the top band of its intelligence dimensions. Not because it is flashy, but because it is hard. It requires discipline across technology, process, and organization.

At this point, AI is no longer a feature you add to a system. It becomes the system. It coordinates tradeoffs faster than people can. It reacts to variability without waiting for meetings. It adapts as conditions change rather than following static rules.

This is not about removing people from manufacturing. It is about removing latency. Decisions happen when they are needed, not when someone is available. The operation becomes more resilient, more responsive, and more consistent.

Industry 4.0 at this level is not the end of the journey. It is the point where the journey runs continuously on its own.

Value Gained:

When machines and systems can learn, adapt, and make decisions on their own, the role of human intervention fundamentally changes. The organization no longer depends on individuals to constantly interpret data, adjust processes, or respond to unexpected changes. Instead, those tasks are offloaded to a learning system that can handle complexity faster, with greater precision, and at a scale that human teams simply can’t match.

This enables a new level of speed, resilience, and intelligence, not just within isolated processes, but across entire production systems, supply chains, and service ecosystems. With AI driving operations moment to moment, businesses can respond to volatility instantly, recover from disruptions autonomously, and pursue performance improvements continuously. It’s not just about doing more with less. It’s about doing more than was previously possible.

And because these systems get smarter over time, every action they take compounds into a long-term competitive advantage, making each cycle faster, more accurate, and more aligned with business goals.

End-to-End Self-Optimization: Systems continuously monitor, analyze, and adjust operations to meet evolving performance targets, without requiring manual reprogramming or intervention.

Increased Speed and Resilience: Autonomy enables faster response to variability, demand shifts, and disruptions, allowing systems to self-correct in real time.

Reduced Operational Overhead: Frees up human resources by automating routine analysis and control, allowing people to focus on creative, strategic, and exception-driven work.

Improved Consistency and Precision: AI-driven systems reduce variance, ensure adherence to best practices, and deliver repeatable quality at scale.

Continual Learning and Improvement: Every cycle, decision, and data point becomes fuel for learning, enabling ongoing performance optimization with no plateau.

Strategic Advantage That Scales: Autonomy creates an operational model that not only sustains itself, but scales with minimal marginal cost, turning adaptability into a core business asset.

Technical Requirements:

At the core of full autonomy is intelligence: not just automation, but systems that think, reason, and evolve. What distinguishes Industry 4.0 from previous stages isn’t connectivity or visibility. It’s the central role of AI as the operational brain. Autonomy means your system is learning from experience, optimizing in real time, and making decisions without being explicitly programmed for every scenario.

To make this possible, a suite of AI technologies must operate together, each bringing unique strengths to sensing, interpretation, prediction, planning, and control. These are not bolt-ons; they are the fabric of an autonomous system.

Reinforcement Learning and Self-Optimizing AI: Reinforcement learning allows systems to improve through experience. Models observe outcomes, adjust actions, and optimize against goals like throughput, quality, energy, or cost. Over time, performance improves without manual retuning.

Agentic AI Systems: Autonomy requires agents, not just models. Agentic AI systems are goal-driven entities that sense conditions, reason across constraints, take action, and replan when the environment changes. These agents must share context across systems, machines, and processes to act coherently. Protocols like the Model Context Protocol (MCP) give agents a standard way to reach tools and data sources, so they can draw on consistent operational state, history, constraints, and objectives and coordinate decisions rather than operate in isolation.

Generative AI for Dynamic Planning and Simulation: Generative AI enables autonomous systems to handle situations they have not seen before. These models generate plans, workflows, and responses when predefined rules or historical patterns are insufficient. They support scenario reasoning, what-if analysis, and adaptive sequencing in complex, fast-changing environments.

Machine Learning at Scale: Autonomous operations depend on many models running continuously. Predictive maintenance, anomaly detection, yield optimization, energy forecasting, and quality prediction must operate in parallel across large datasets. Models must retrain, validate, and evolve over time.

Multimodal AI Integration: Autonomy requires understanding, not just data. Multimodal AI combines sensor readings, machine signals, vision, audio, text, logs, and alarms into a unified view of the operating environment. This enables richer situational awareness and more confident decisions.

Digital Twin Ecosystems: Create real-time, high-fidelity virtual environments that allow AI systems to simulate actions before deploying them, reducing risk and accelerating adaptation.

Real-Time Edge Computing: Delivers low-latency inference and decision-making close to the source, critical for autonomous action in fast-moving or disconnected environments.

Cyber-Physical Systems: AI decisions must translate directly into physical action. Tight integration between software intelligence and equipment control ensures actions are executed, verified, and corrected in real time.

Autonomous Control and Execution Loops: Systems must be architected to perceive, decide, and act continuously, enabling full-loop automation without human-in-the-loop dependencies.

Scalable AI Infrastructure and Governance: When AI becomes the operating core, infrastructure and governance are non-negotiable. Organizations need platforms for training, deployment, monitoring, and accountability to ensure autonomy remains reliable, safe, and trusted.
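Several of the items above fit together into one loop: perceive, simulate in a digital twin, decide, act. Here is a minimal sketch of that loop. The "twin" is a deliberately toy linear model of a temperature process, and `twin_predict`, `choose_action`, `run_loop`, the 0.8 gain, and the 210.0 safety ceiling are all assumptions made up for illustration. In this sketch the twin also stands in for the real plant.

```python
def twin_predict(temperature, setpoint_change):
    """Toy digital twin: predict the next temperature (hypothetical model)."""
    return temperature + 0.8 * setpoint_change


def choose_action(temperature, target, candidates, safe_max):
    """Simulate each candidate in the twin and keep the best safe one."""
    best_change, best_error = None, float("inf")
    for change in candidates:
        predicted = twin_predict(temperature, change)
        if predicted > safe_max:
            continue  # reject actions the twin predicts to be unsafe
        error = abs(predicted - target)
        if error < best_error:
            best_change, best_error = change, error
    return best_change  # None means no candidate was safe


def run_loop(temperature, target, steps,
             candidates=(-5.0, -1.0, 0.0, 1.0, 5.0), safe_max=210.0):
    """Perceive, simulate, decide, and act for a fixed number of steps."""
    history = [temperature]
    for _ in range(steps):
        change = choose_action(temperature, target, candidates, safe_max)
        if change is None:
            break  # no safe action: stop and hand over to a human
        # Act: here the twin stands in for the real equipment response.
        temperature = twin_predict(temperature, change)
        history.append(temperature)
    return history
```

Calling `run_loop(180.0, 200.0, 10)` walks the temperature up toward the target and then holds it there. A real system would replace the toy model with a validated twin and a learned policy, but the order of operations is the point: every action is tried virtually before it is tried physically.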

Business Requirements:

Most businesses are built for control, predictability, and manual intervention. Autonomous operations demand a different model: trust in learning systems, tolerance for dynamic behavior, and teams prepared to intervene only when it truly matters. It changes what people do, how value is created, and how the organization defines success. Without this cultural and structural evolution, even the most advanced technical capabilities will stall.

AI Governance and Ethics Frameworks: Organizations must establish clear policies for transparency, accountability, fairness, and explainability in AI decision-making, especially in critical or regulated environments.